Case Study: Wearable Tech powered by Docker and Triton

May 09, 2017 - by Luc Juggery, Co-Founder, TRAXxs

Introduction

High-quality IoT apps can be challenging to build and scale with traditional monolithic service architectures. The backends serving millions of device endpoints are often plagued by server-side concurrency bottlenecks caused by synchronous server threads. Microservices built with Node.js are being used to solve this design problem, primarily because of Node.js's asynchronous, event-based architecture and high-concurrency characteristics.

Deploying these microservices in Docker containers is turning out to be a popular way to scale these lightweight backend APIs.

In this case study, we showcase a path-breaking solution from TRAXxs, a fabless startup developing wearable technology for shoes to enable value-added services such as health and communication, among others. The solution uses Node.js and containers as the leading technologies, and is deployed on Joyent's Triton Container Native Cloud for high performance and cost optimization.

Overview

At the end of 2016, TRAXxs was selected to be part of Joyent's Node.js & Docker Innovator Program. We at TRAXxs are really glad to be part of it, as Node.js and Docker are two technologies we really love working with.

The on-boarding program started with a workshop dedicated to the creation of an application composed of Node.js micro-services. The application was then deployed to Triton Public Cloud from Joyent using Docker Compose. I highly recommend you have a look at this workshop, as it illustrates the interesting use case of an IoT application and introduces several Node.js libraries that are worth a look. The innovator workshop is available on Joyent's GitHub repository.

For TRAXxs, it was a really great way to discover Triton, which we had obviously heard about before but never used.

TRAXxs: who we are

TRAXxs is a fabless startup developing wearable technology for shoes, building hardware products and software platforms. Its solutions combine geolocation, telecommunication, and activity monitoring with comfort insoles, offering the opportunity to transform any type of shoe into a real-time GPS tracking solution, which works without any additional device.

TRAXxs’ XSole is a smart insole with embedded sensors, geolocation, and telecommunication chips. An exclusive architecture based on movement detection and innovative passive antennas optimizes power consumption. These brand new patented products are low-cost and robust integrated devices.

The offering is completed by a set of value-added services, allowing subscribers to monitor in real time the location of the equipped shoe through secured applications and APIs developed with proven technologies.

TRAXxs currently addresses the security market, specifically Lone Worker Protection, and helps companies ensure the security of their employees.

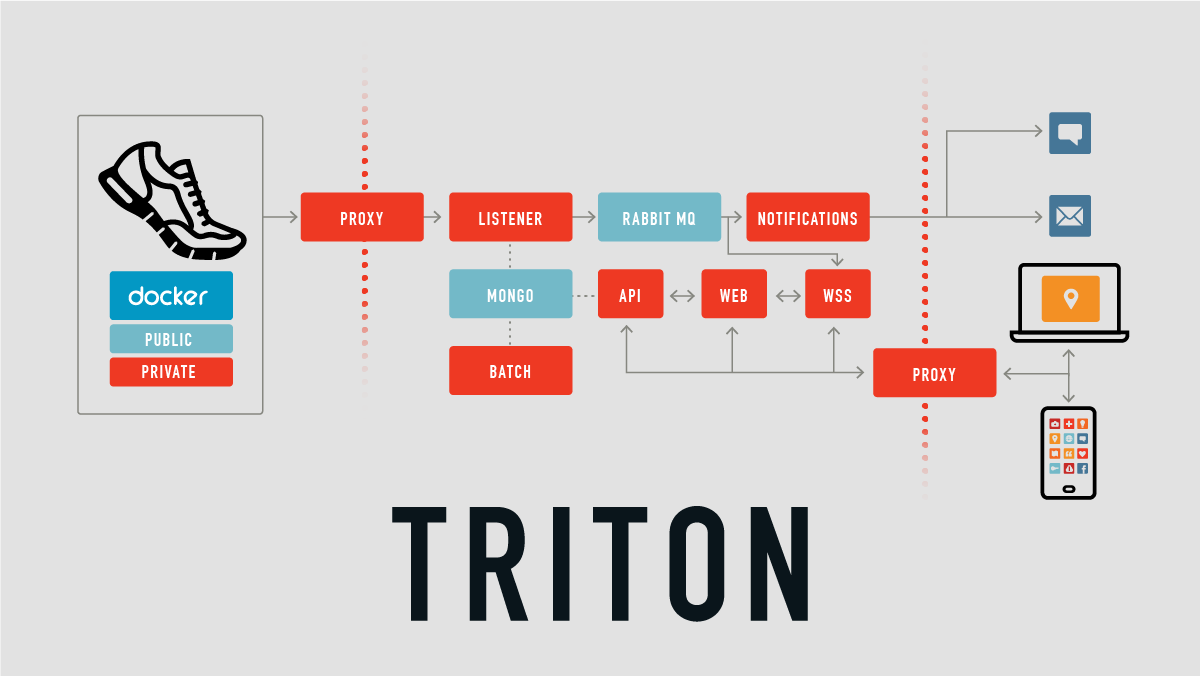

Our application

To handle data coming from the XSole devices, the application’s backend is composed of several micro-services. The picture below illustrates the overall architecture. Not all of our services, nor all of the interactions between them, are represented.

There are two ways data is sent to the application.

Data transfer is initiated by the devices

Devices send positional data and some other specific information to the listener through a reverse-proxy. The listener analyzes, parses, and stores the computed messages in the underlying MongoDB database. Based on its content, the message is pushed to a message queue and handled by the notification and websocket services. The notification service sends an alert via email and sms, while the websocket service pushes data to web and mobile clients.
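The content-based routing described above can be sketched as a small pure function. This is only an illustration: the message shape, the `type` values, and the queue names are hypothetical and are not taken from the TRAXxs code base.

```javascript
// Hypothetical queue names; the real ones are not given in this article.
const QUEUES = { notification: 'notifications', websocket: 'wss' };

// Decide which queues a stored message should be published to,
// based on its content (here, a hypothetical "type" field).
function routeMessage(message) {
  const targets = [];
  // Positions and alerts are both forwarded to connected web and mobile clients.
  if (message.type === 'position' || message.type === 'alert') {
    targets.push(QUEUES.websocket);
  }
  // Only alerts trigger an email / SMS notification.
  if (message.type === 'alert') {
    targets.push(QUEUES.notification);
  }
  return targets;
}
```

Keeping the decision in a function with no I/O makes it easy to test separately from the RabbitMQ client that would actually publish the message.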

Data transfer is initiated by the users

Users get access to a dashboard on the web and mobile applications. Both clients use the API that retrieves data from the underlying database.

Each service is created with its own Docker image, either developed internally or available on the Docker Hub. The construction of the whole application is handled by Docker Compose in a docker-compose.yml file that looks like the following (specific environment variables and options have been removed for clarity):

    version: '2.1'

    services:
      # Data store
      db:
        image: mongo:3.2
        volumes:
          - mongo-data:/data/db
        restart: always

      # Session store
      kv:
        image: redis:3.0.7-alpine
        volumes:
          - redis-data:/data
        restart: always

      # RabbitMQ server
      mq:
        image: 'rabbitmq:3.6'
        restart: always

      # Listener
      listener:
        image: traxxs/listener:develop
        restart: always

      # API
      api:
        image: traxxs/api:develop
        restart: always

      # Web front-end
      web:
        image: traxxs/web:develop
        restart: always

      # SMS / Email notifications
      notifications:
        image: traxxs/notifications:develop
        restart: always

      # Authn
      authn:
        image: traxxs/authn:develop
        restart: always

      # WebSocket
      wss:
        image: traxxs/wss:develop
        restart: always

      # Proxy
      proxy:
        build: traxxs/proxy:develop
        restart: always
        ports:
          - "8000:8000" # web
          - "8002:8002" # api
          - "9000:9000" # listener tcp

    volumes:
      mongo-data:
      redis-data:

Note: all the images are tagged with develop as this file is a staging version.

The content of docker-compose.yml is pretty simple. Let’s see how to deploy it.

Deployment on Triton

From a development environment, we first need to configure the Docker client so it targets Triton’s environment.

    eval $(triton env --docker luc-ams)

Note: luc-ams is a profile created on the datacenter located in Amsterdam. As TRAXxs is located in Sophia-Antipolis (in southeastern France), this datacenter is the closest to our clients.

Once the local client targets Triton, we can verify the Docker version used.

    $ docker version
    Client:
     Version:      17.03.0-ce
     API version:  1.24 (downgraded from 1.26)
     Go version:   go1.7.5
     Git commit:   60ccb22
     Built:        Thu Feb 23 10:40:59 2017
     OS/Arch:      darwin/amd64

    Server:
     Version:      1.9.0
     API version:  1.21 (minimum version )
     Go version:   node4.6.1
     Git commit:   630008d
     Built:        Fri Feb 24 00:33:46 2017
     OS/Arch:      solaris/i386
     Experimental: false

The client’s version is 17.03.0-ce on a darwin/amd64 architecture, as the Docker CLI is running on a MacBook Pro. Regarding the server, as the Go version field indicates (node4.6.1), Triton does not run a Go-based Docker Engine but instead provides its own implementation of the Docker Remote API.

This means the Docker CLI (running locally) sends the same HTTP requests to Triton’s implementation of Docker’s Remote API as it would to a real Docker engine. Under the hood, Triton creates containers using its own technology (SmartOS zones) instead of Docker containers.

When issuing Docker commands from the local CLI, Triton appears like a regular Docker host. From the outside it looks like one big Docker host. Also, as everything is abstracted via Triton and SmartOS, there is no need to manage several hosts to set up a cluster.
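To make the "same HTTP requests" point concrete, here is a minimal sketch of how a client could prepare a call to the Remote API `version` endpoint, which is what `docker version` queries. It assumes that `triton env --docker` exported `DOCKER_HOST` in the form `tcp://host:port` and `DOCKER_CERT_PATH` pointing at a directory with `cert.pem`, `key.pem`, and `ca.pem`; the function name and the `tlsFiles` field are hypothetical.

```javascript
const path = require('path');

// Build options for an HTTPS call to the Remote API "version" endpoint.
// Assumes env.DOCKER_HOST looks like tcp://host:port and env.DOCKER_CERT_PATH
// contains cert.pem, key.pem and ca.pem (the usual Docker TLS layout).
function versionRequestOptions(env, apiVersion) {
  const endpoint = new URL(env.DOCKER_HOST.replace(/^tcp:/, 'https:'));
  return {
    method: 'GET',
    host: endpoint.hostname,
    port: Number(endpoint.port),
    path: `/v${apiVersion}/version`,
    // File paths only; read them with fs.readFileSync before calling https.request.
    tlsFiles: {
      cert: path.join(env.DOCKER_CERT_PATH, 'cert.pem'),
      key: path.join(env.DOCKER_CERT_PATH, 'key.pem'),
      ca: path.join(env.DOCKER_CERT_PATH, 'ca.pem'),
    },
  };
}
```

Calling `versionRequestOptions(process.env, '1.21')` would target the API version reported by the server above. The request itself is left out so that the sketch stays free of network access.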

Notes:

- Some features of the Docker Remote API are not implemented in Triton because they are handled by its own underlying mechanisms (services, networks)

- Bryan Cantrill gave a great presentation of this Docker killer feature

Because Docker moves fast and SmartOS zones handle containers differently, not all features of the Docker Remote API are implemented in the Triton Elastic Docker host. For those reasons, a couple of things need to be modified in the original docker-compose.yml file so the application runs on Triton. Honestly, it is a really straightforward process.

In the current implementation of the Docker Remote API, service discovery has to be done using the links mechanism within the docker-compose.yml file, as there is no embedded Docker DNS server. If we take the examples of the listener and the API services, the changes are the following:

    # Listener
    listener:
      image: traxxs/listener:develop
      links:
        - db:db
        - kv:kv
        - mq:mq
      network_mode: bridge
      restart: always

    # API
    api:
      image: traxxs/api:develop
      links:
        - db:db
      network_mode: bridge
      restart: always

As the network implementation is different on Triton, we specified the bridge network_mode so the containers can communicate with each other. Those changes, done for each service, were the only necessary steps taken to have the application running on Triton.
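From the point of view of a Node.js service, a link mostly means that the alias can be used as a hostname. The sketch below builds a MongoDB connection string from the `db` alias, assuming the alias resolves inside the container as it does with legacy Docker links. The `MONGO_HOST` and `MONGO_PORT` variables and the `traxxs` database name are hypothetical.

```javascript
// Build a MongoDB connection string from the link alias declared
// in docker-compose.yml ("links: - db:db").
// MONGO_HOST, MONGO_PORT and the "traxxs" database name are hypothetical.
function mongoUrl(env) {
  const host = env.MONGO_HOST || 'db'; // default to the link alias
  const port = env.MONGO_PORT || '27017'; // default MongoDB port
  return `mongodb://${host}:${port}/traxxs`;
}
```

Allowing an environment override keeps the same image usable in an environment where the database is reached through another name.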

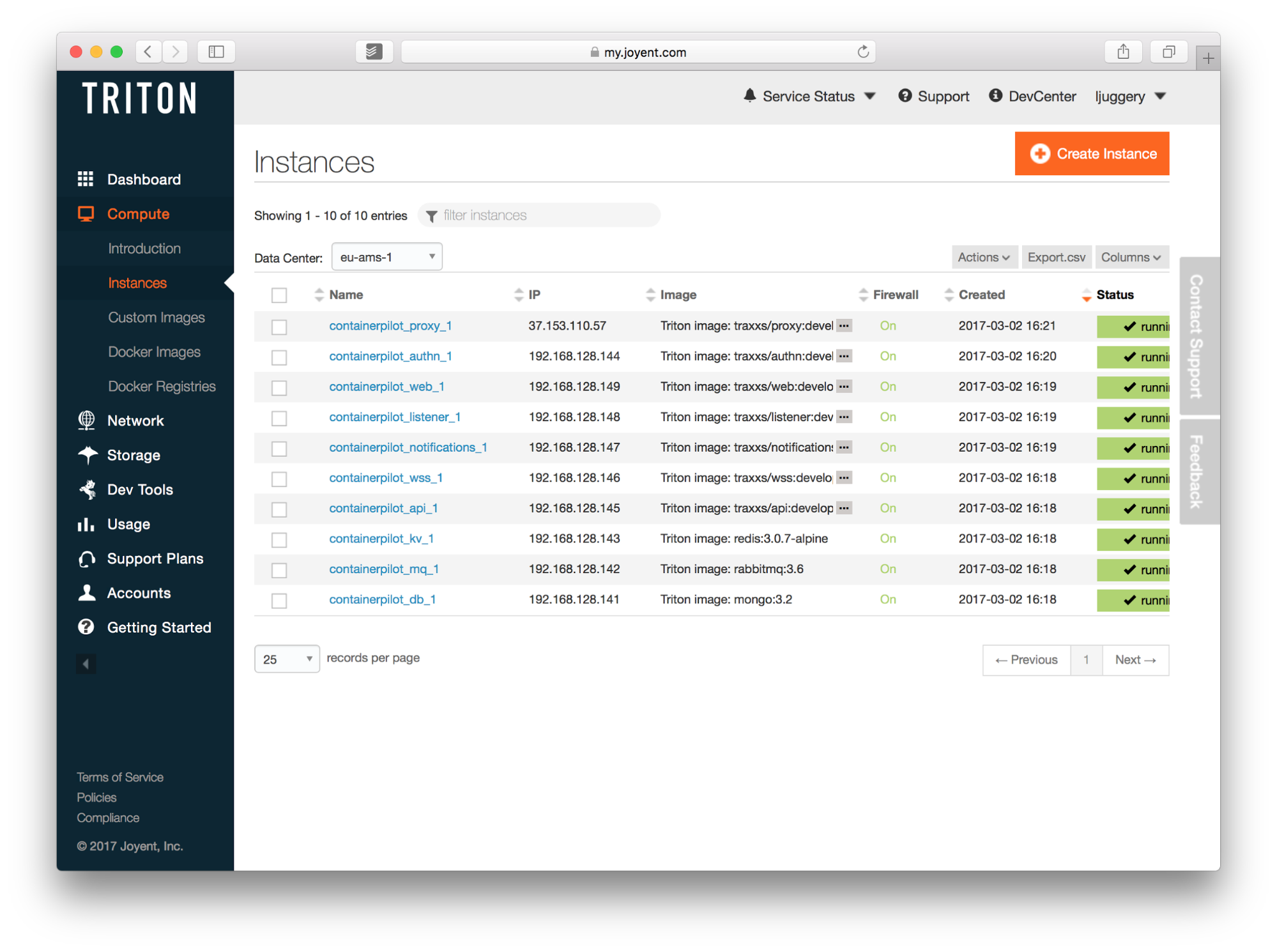

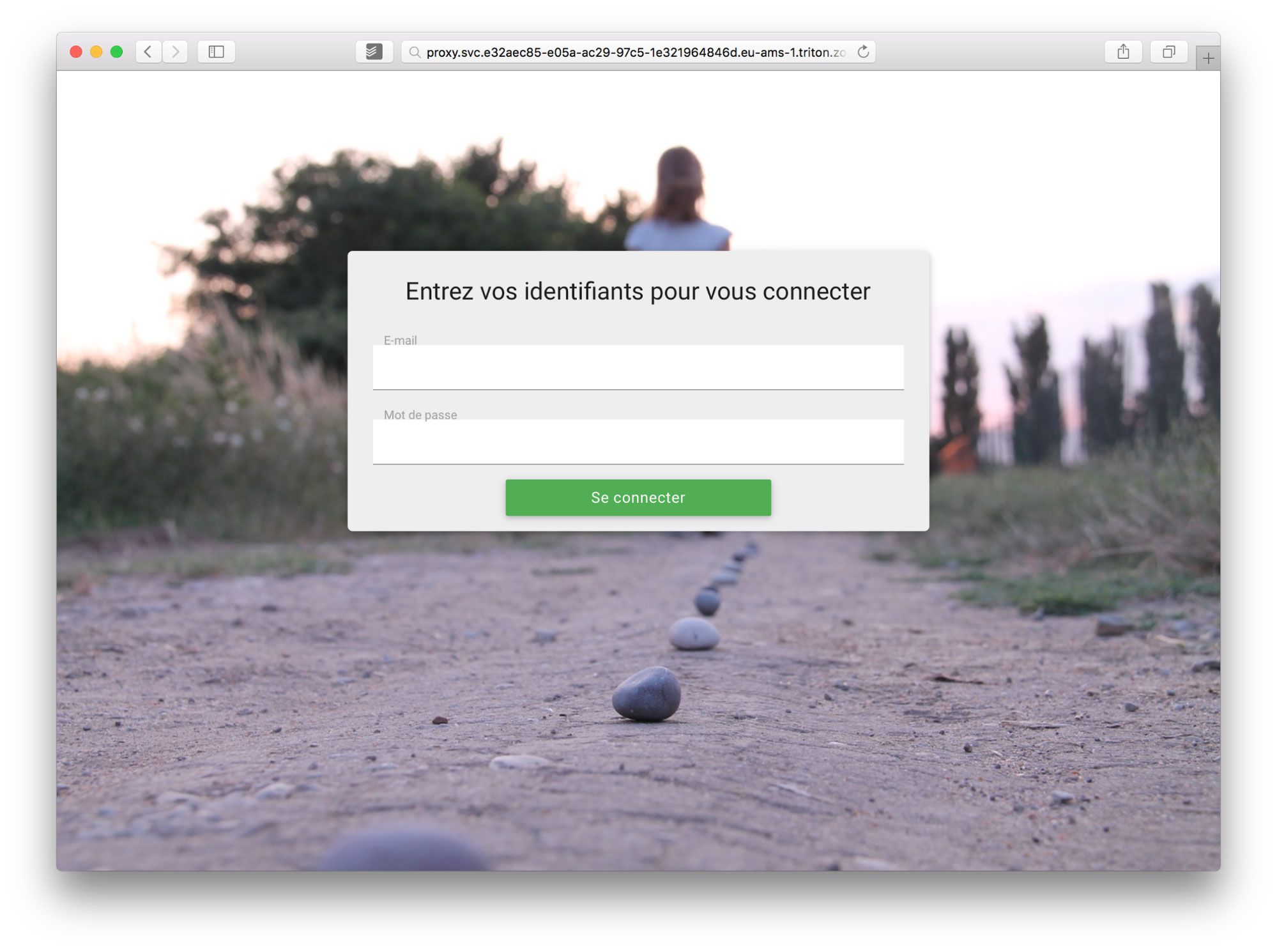

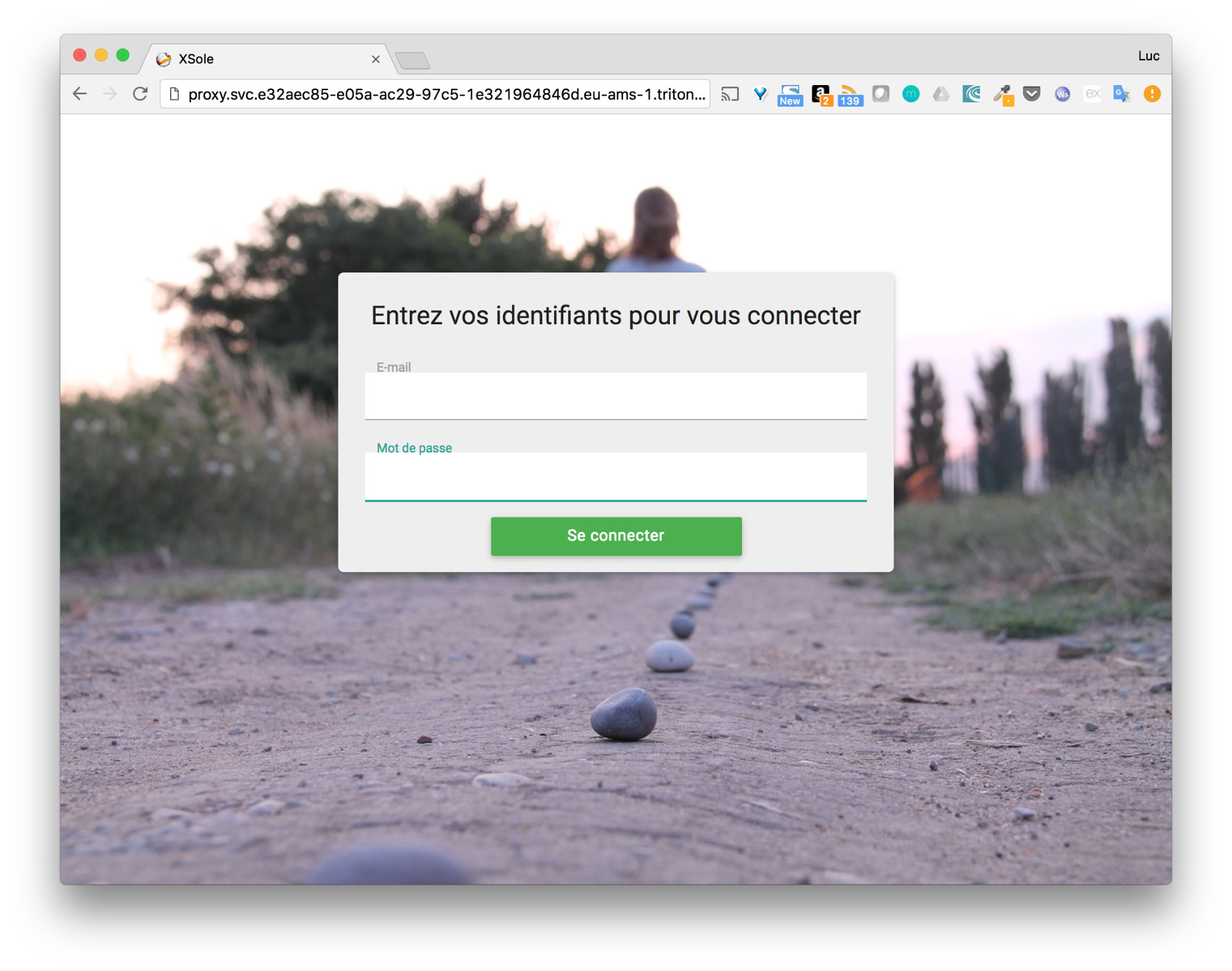

Using the Triton Container Name Service (CNS) name provided for the proxy service, we have access to the web front-end.

Running TRAXxs application with the Autopilot Pattern

As we’ve seen previously, moving the application onto Triton is a simple process. On top of that, the administration interface works fine and is really user-friendly. Running the same application using the Autopilot Pattern with Joyent’s ContainerPilot will be even better. ContainerPilot will allow us to move the orchestration tasks into the application itself. Several changes need to be made in order to do this, and we will illustrate the approach with the API service of TRAXxs’ application. This does not require a lot of work, especially when dealing with a microservices application written in Node.js.

Adding a Consul server

Consul is a product made by HashiCorp, used for discovering and configuring services in an infrastructure. It provides several key features:

- Service discovery

- Health check

- Key/value store

- An ability to scale to multiple datacenters

In the present case, it is used to keep the global state of the application and can be added with the following configuration in the docker-compose.yml file:

    consul:
      image: autopilotpattern/consul:latest
      restart: always
      dns:
        - 127.0.0.1
      labels:
        - triton.cns.services=consul
      ports:
        - "8500:8500"
      command: >
        /usr/local/bin/containerpilot
        /bin/consul agent -server
        -config-dir=/etc/consul
        -bootstrap-expect 1
        -ui-dir /ui

This service will run Consul as a server. Each service will have its own embedded Consul client that communicates with the server.

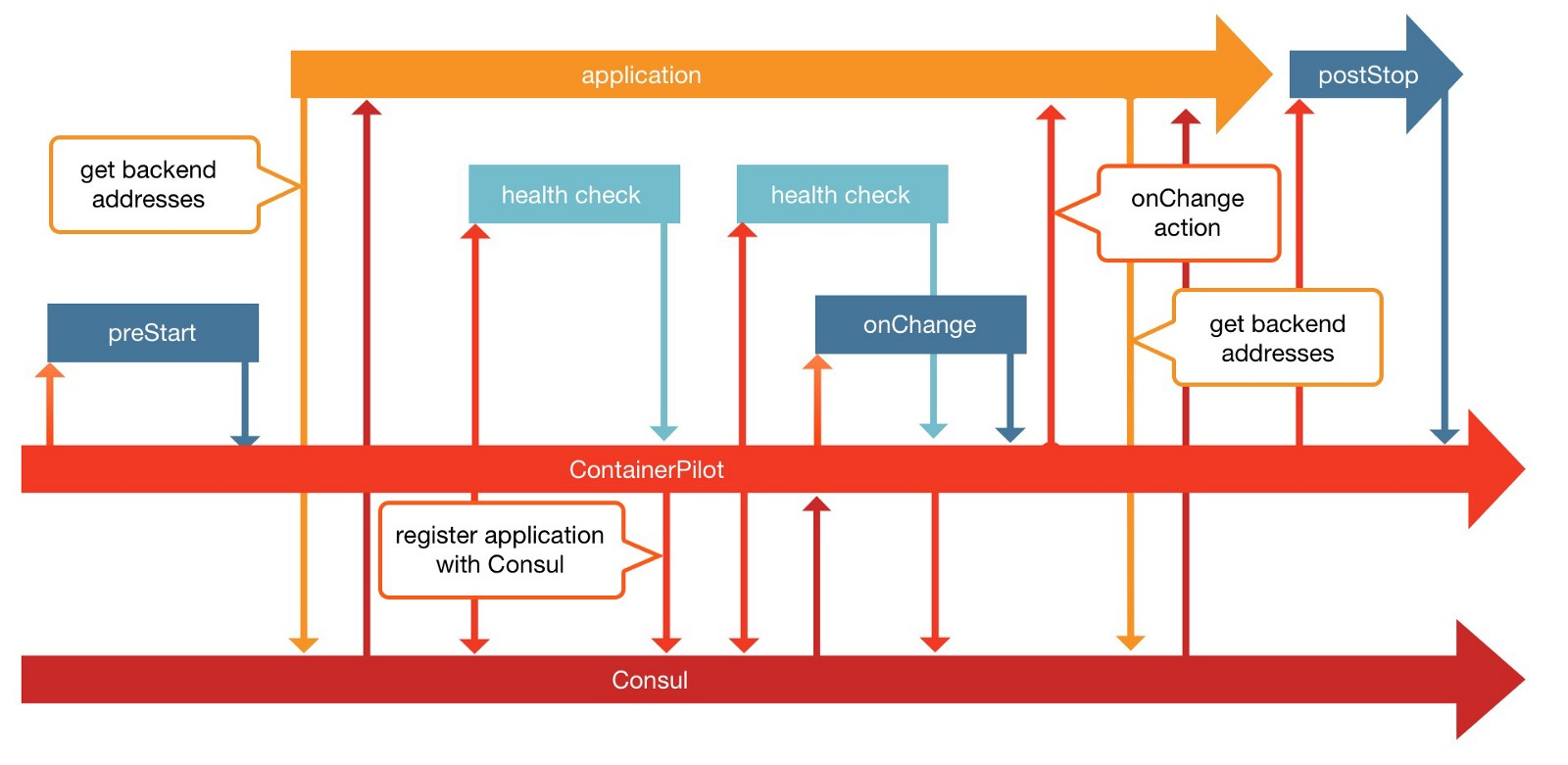

Adding ContainerPilot to each service

ContainerPilot will be added to each of the application’s services. It is composed of the containerpilot.json configuration file and a binary that communicates with the application’s service on one side and with Consul on the other. ContainerPilot is in charge of:

- Service registration within Consul

- Defining how the health check of the service needs to be done

- Defining the dependencies of the current service

- Managing the service workflow by calling configuration scripts for preStart and onChange actions

The picture below gives a high-level view of the interaction between the application, ContainerPilot and Consul.

The following will illustrate the changes made with the API service. Similar changes will be made in the other services as well.

Note: among the services which compose the application, db, kv, and mq are based on existing images (not developed by TRAXxs): MongoDB, Redis and RabbitMQ. Instead of adding ContainerPilot manually in those services, we have used the image already packaged by Joyent available in the GitHub Autopilot Pattern repository. RabbitMQ is not packaged yet but it will probably be soon. Adding ContainerPilot to a service is done in several steps that are listed below.

Embedding a Consul agent

The Consul agent handles communication with the Consul server. It is added in the Dockerfile of the service:

    # Install consul
    RUN export CONSUL_VERSION=0.7.2 \
        && export CONSUL_CHECKSUM=aa97f4e5a552d986b2a36d48fdc3a4a909463e7de5f726f3c5a89b8a1be74a58 \
        && curl --retry 7 --fail -vo /tmp/consul.zip "https://releases.hashicorp.com/consul/${CONSUL_VERSION}/consul_${CONSUL_VERSION}_linux_amd64.zip" \
        && echo "${CONSUL_CHECKSUM}  /tmp/consul.zip" | sha256sum -c \
        && unzip /tmp/consul -d /usr/local/bin \
        && rm /tmp/consul.zip \
        && mkdir /config

Adding a ContainerPilot configuration file

This file defines the action to be taken when a service starts, how the health check needs to be done, the dependencies on other services, and how the service should react if an event occurs with one of those dependencies. For the API, the containerpilot.json looks like the following.

    {
      "consul": "localhost:8500",
      "preStart": "/app/manage.sh prestart",
      "services": [
        {
          "name": "api",
          "health": "/usr/bin/curl -o /dev/null --fail -s http://localhost/health",
          "poll": 3,
          "ttl": 10,
          "port": 80
        }
      ],
      "coprocesses": [
        {
          "command": ["/usr/local/bin/consul", "agent",
                      "-data-dir=/data",
                      "-config-dir=/config",
                      "-rejoin",
                      "-retry-join", "{{ if .CONSUL }}{{ .CONSUL }}{{ else }}consul{{ end }}",
                      "-retry-max", "10",
                      "-retry-interval", "10s"],
          "restarts": "unlimited"
        }
      ],
      "backends": [
        {
          "name": "mongodb-replicaset",
          "poll": 3,
          "onChange": "/app/manage.sh db-change"
        }
      ]
    }

Let’s detail the keys defined in this file:

- consul indicates the location of the Consul server which the container will connect to. It is defined as localhost as it targets a Consul agent running locally. This agent is in charge of the health check of the local service and it communicates with the consul service defined previously.

- services lists the services this container provides to other containers. In this case, only the api service is exposed. It also defines the health check command that verifies the status of the service and the frequency of the poll, as well as the number of seconds to wait before considering the service unhealthy.

- coprocesses lists the processes that run with the current services. In our example, a Consul agent is running in our container. The command to run this agent uses the consul string (unless an environment variable defines another value). This string can be used because of the link within the Docker Compose file. The agent running in each container is connected to the consul service of our application.

- backends lists the other services which the defined service depends on. In our example, the api service only depends on the db service to run properly.
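The health command in the services entry polls `http://localhost/health` with `curl --fail`, which exits with an error for any HTTP status of 400 or above. A minimal sketch of a matching handler, written against Node's plain `http` request and response objects, could look like this. The `isDbConnected` function is hypothetical and stands for whatever the service uses to know the state of its database connection.

```javascript
// Create a request handler for GET /health.
// isDbConnected is a hypothetical function returning the current database state.
function createHealthHandler(isDbConnected) {
  return function healthHandler(req, res) {
    if (req.method !== 'GET' || req.url !== '/health') {
      res.statusCode = 404;
      res.end();
      return;
    }
    // curl --fail treats any status >= 400 as a failure, so 503 fails the check.
    res.statusCode = isDbConnected() ? 200 : 503;
    res.end();
  };
}
```

The handler can be passed to `http.createServer()` or mounted in an Express application. Tying the status to the database state is one possible design choice: it makes the api service report itself as unhealthy as soon as its only dependency is gone.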

An additional script, manage.sh, is used here in order to handle the start of the service and the database service’s events. This script is really minimal in the current version.

    #!/bin/sh

    # The action name is passed as the first argument (see containerpilot.json)
    event="$1"

    if [ "$event" = "prestart" ]; then
        # Wait until Consul reports a passing check for the database
        while [ -z "$(curl -s "http://${CONSUL}:8500/v1/health/service/mongodb-replicaset" | grep passing)" ]
        do
            echo "db is not yet healthy..."
            sleep 5
        done
        echo "db is healthy, moving on..."
        exit 0
    fi

    # If the db is not accessible anymore, signal the api service so it reconnects
    if [ "$event" = "db-change" ]; then
        echo "DB-EVENT"
        pkill -SIGHUP node
    fi

When a preStart event is received, the script waits until the database is registered in Consul. When a db-change event is received, a SIGHUP signal is sent to the service so it can trigger a reconnection to the database.
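The script above looks for the word "passing" anywhere in the response of Consul's `/v1/health/service/<name>` endpoint. That response is a JSON array in which each entry carries a `Checks` list, and node-level checks appear in that list next to the service check. A stricter test, sketched here in Node.js as an illustration rather than as the approach TRAXxs uses, is to require that every check of at least one instance is passing. The function name is hypothetical.

```javascript
// Return true when at least one instance in a Consul
// /v1/health/service/<name> response has only passing checks.
function hasHealthyInstance(entries) {
  return entries.some((entry) =>
    entry.Checks.length > 0 &&
    entry.Checks.every((check) => check.Status === 'passing'));
}
```

The function takes the already parsed response body, so it can be combined with any HTTP client.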

Modifying the service code

You have to modify the service code in order to catch the event sent by ContainerPilot. In the case of the api service which only depends on db, a reconnection to the database will be triggered if an event occurs at the database level.
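Without any helper library, catching the signal comes down to a SIGHUP listener on the Node.js process. The sketch below is an assumption about how this could be written by hand, not the code TRAXxs runs. The `reconnect` argument is a hypothetical callback-style function, and the process object is injected so that the handler can be exercised with a plain event emitter.

```javascript
// Register a reload handler on a process-like event emitter.
// reconnect is a hypothetical callback-style function, e.g. a bound db.reconnect.
function installReloadHandler(proc, reconnect, log) {
  let reloading = false;
  proc.on('SIGHUP', () => {
    if (reloading) return; // ignore signals that arrive during a reconnection
    reloading = true;
    reconnect((err) => {
      reloading = false;
      if (err) log(`DB reconnection: ${err.message}`);
    });
  });
}
```

In a service this would be wired as `installReloadHandler(process, db.reconnect.bind(db), console.error)`, where `db` is the service's own database wrapper. The guard avoids stacking reconnections when several change events arrive in a row.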

As the API was based on the Sails framework, there was not a lot we could do to force a reconnection. Sails is a great framework based on Express and Socket.io. Sails is the Ruby on Rails of the Node.js world, but it hides too much internal work from the developer. Migrating the API to Express was not only needed to use ContainerPilot, it was also a move we had already planned, so it was a great fit.

Once this was done, we used Joyent's Piloted npm module, which eases the process of catching events from backends. Basically, the following code was added to the api in order to reconnect to the database each time the configuration of the underlying database is changed in Consul.

    // Manage connection to the underlying database (connection + reload)
    Piloted.config(ContainerPilot, (err) => {
      // Reconnect when ContainerPilot reloads its configuration
      Piloted.on('refresh', () => {
        db.reconnect(function(err) {
          if (err) {
            winston.error("DB reconnection:" + err.message);
          }
        });
      });
    });

Embedding the ContainerPilot binary

In order for everything to be put into action, the ContainerPilot binary is added in the Dockerfile of the service and defined as the entrypoint of the Docker image. When started, it will load the containerpilot.json file and run the service’s init script.

    # Install ContainerPilot
    ENV CONTAINERPILOT_VERSION 2.6.0
    RUN export CP_SHA1=c1bcd137fadd26ca2998eec192d04c08f62beb1f \
        && curl -Lso /tmp/containerpilot.tar.gz \
           "https://github.com/joyent/containerpilot/releases/download/${CONTAINERPILOT_VERSION}/containerpilot-${CONTAINERPILOT_VERSION}.tar.gz" \
        && echo "${CP_SHA1}  /tmp/containerpilot.tar.gz" | sha1sum -c \
        && tar zxf /tmp/containerpilot.tar.gz -C /bin \
        && rm /tmp/containerpilot.tar.gz

    # COPY ContainerPilot configuration
    ENV CONTAINERPILOT_PATH=/etc/containerpilot.json
    COPY containerpilot.json ${CONTAINERPILOT_PATH}
    ENV CONTAINERPILOT=file://${CONTAINERPILOT_PATH}

Putting this all together

The main services (api, listener, web, etc.) have been modified according to the steps described above, taking into account the specificities of each one (mainly the dependent services). The db and kv services (originally based on MongoDB and Redis respectively) now use the images already packaged by Joyent:

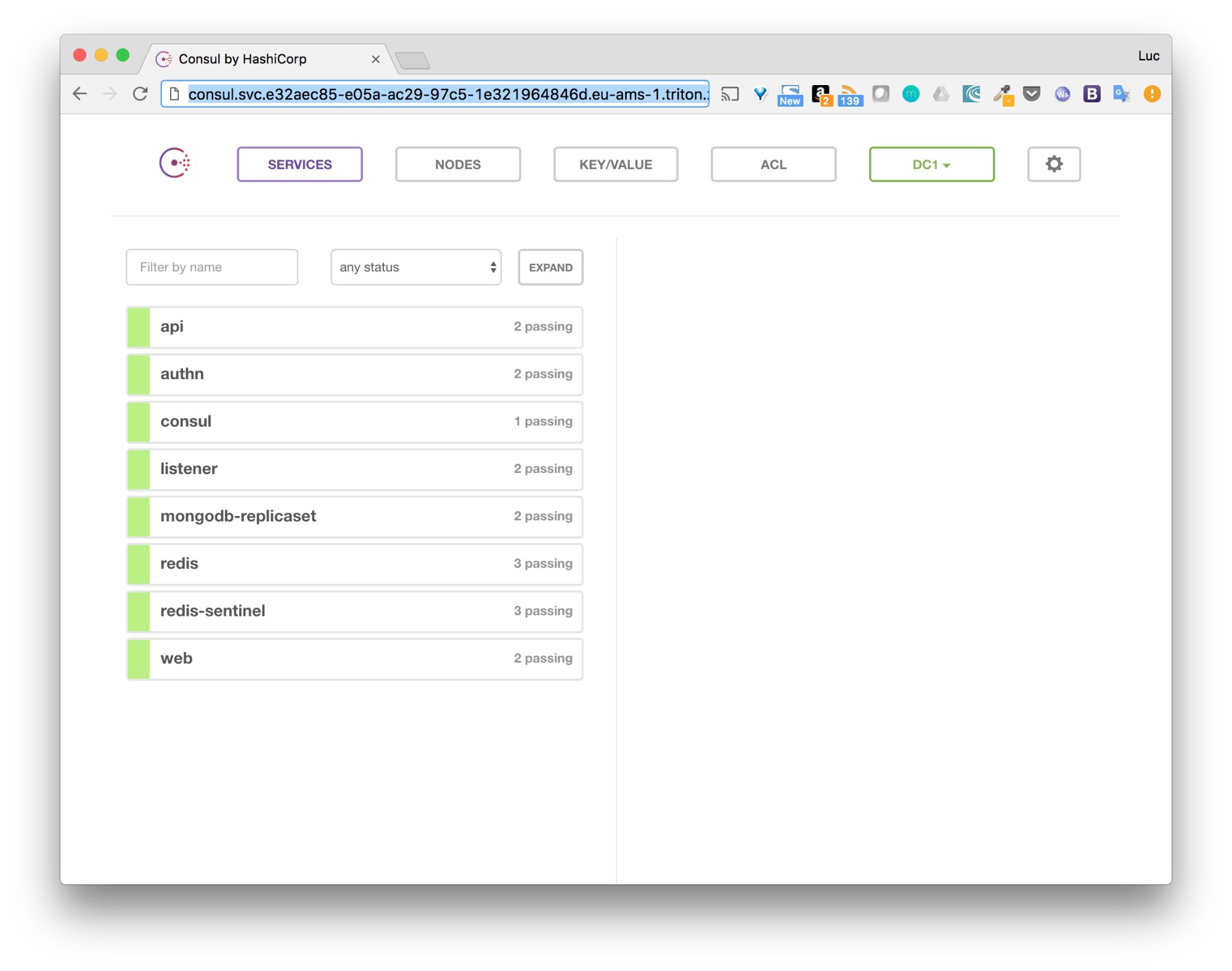

All of the services (except the few that have not yet been migrated) can be seen within Consul’s UI.

The application is available in its ContainerPilot flavor on Triton.

To illustrate how the application runs with ContainerPilot, let’s see what happens if the database goes away.

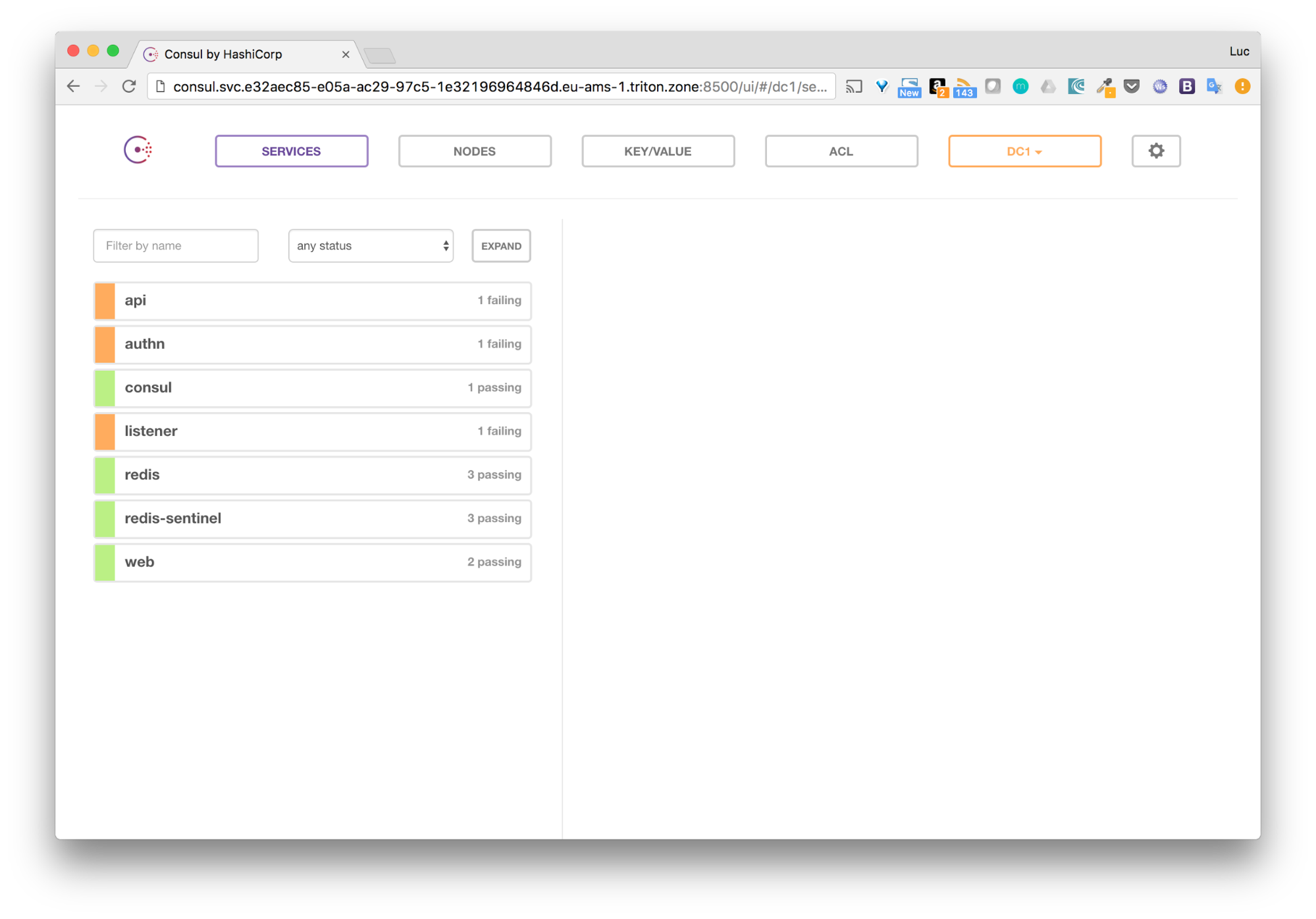

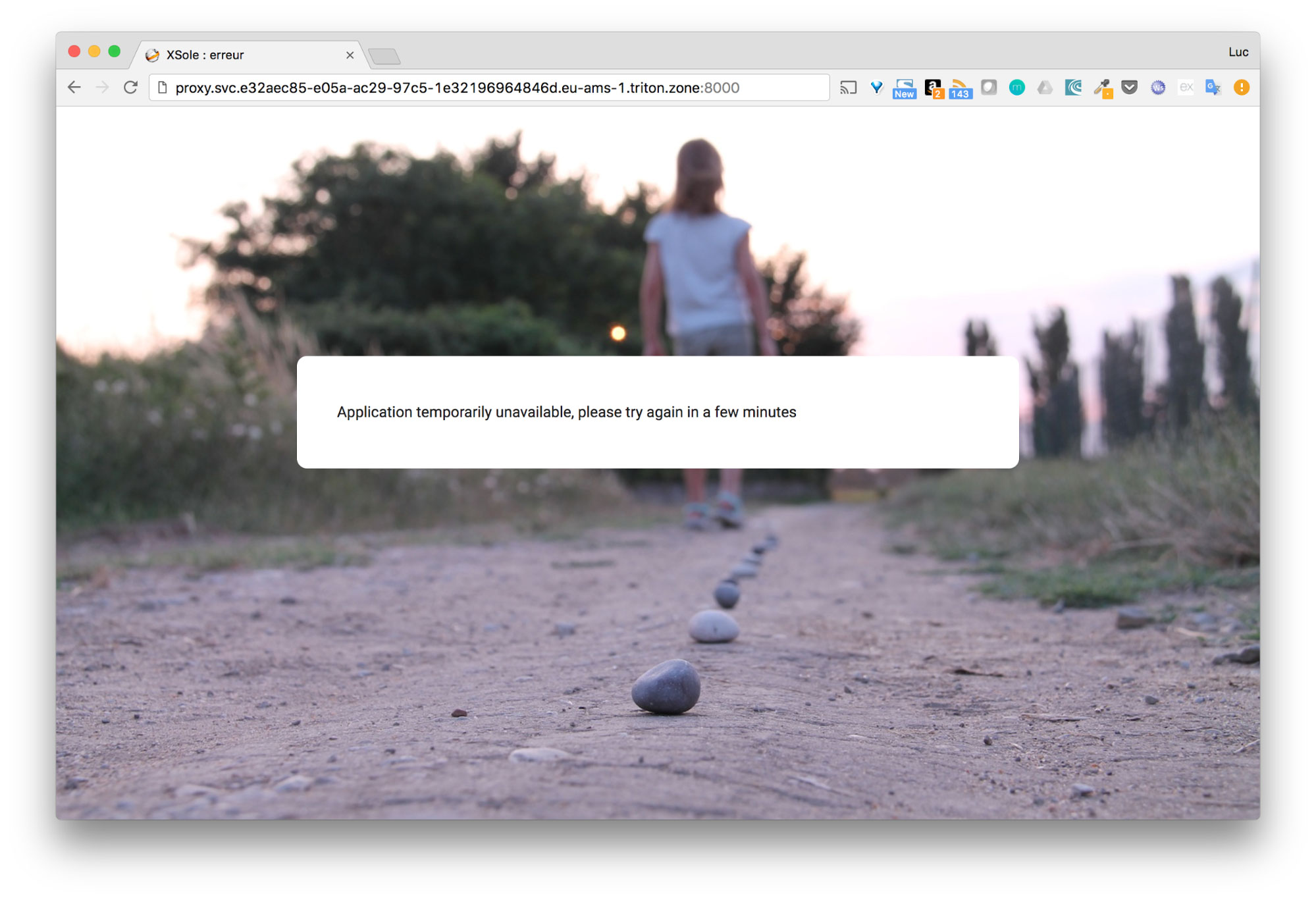

    $ docker-compose stop db

The dependent services automatically become unhealthy and the web interface switches to unavailability mode.

Summary

Deploying TRAXxs’ application onto Joyent’s Triton is a smooth process, especially for a microservices application with services developed in Node.js. By adding ContainerPilot to the picture, we get an application that can orchestrate itself and that is easier to operate. The application is currently hosted on AWS, and we are in the process of migrating its remaining services to Triton and its object storage to Manta.