Spin up a Docker dev/test environment in 60 minutes or less

July 27, 2015 - by Casey Bisson

I was a Joyent customer before I joined as an employee. I discovered Joyent's infrastructure automation solutions while looking for a hybrid cloud solution that would allow me to run dev and test code in a private environment and production in a public cloud with a global presence. The Triton Elastic Container Infrastructure (formerly SmartDataCenter or SDC7) that powers Joyent's public cloud is open source and available for anybody to install to power private clouds, allowing users to enjoy the same platform everywhere. It's free for use as open source, but enterprises of all sizes take advantage of our commercial support offerings.

Triton is the missing link that automates the management of individual servers to turn a data center into a container-native cloud ready to run applications on bare metal. As a customer, I was using Triton (then SmartDataCenter) on inexpensive commodity servers that I'd co-located in a local data center. Moving dev/test environments out of the public cloud not only reduced my cloud expenses, but also significantly improved the performance and flexibility of those dev/test environments.

Now, as a Joyent employee and product manager for Triton, I run a small data center of my own on a few Intel NUCs on my desk. I still use it for dev/test, but now that includes the Triton software as well as my own projects. The NUCs aren't nearly as fast as the hardware we use in our public cloud, but installation and management are basically the same no matter what kind of hardware you choose.

I should note that though Triton is built to manage huge numbers of servers, we actually do most of our development on our laptops in an environment we call "CoaL," or "cloud on a laptop." That flexibility to work quickly and see everything on our laptops is incredibly valuable for our development process (and reflects our interest in preserving the laptop to cloud workflow for our Docker implementation). So if you just want to see how Triton works, you can start there as well.

Triton runs on commodity hardware, but what I'm using is a long way from what our data centers and most private clouds use. Here's my hardware, compared with the nodes behind Triton Elastic Container Service in our public cloud:

- Each node is an Intel NUC D54250WYK with a dual core Intel i5 running at 1.3GHz

- Our public cloud Triton nodes each have 24 fast cores (48 with hyperthreading)

- Each has 16GB non-ECC RAM

- We wouldn't use anything but high quality ECC RAM in our public cloud

- Primary storage for each node is a single 500 GB SSD, with no redundancy

- The public cloud uses high speed disks in a RAIDZ2 configuration.

- My portable nodes don't have any write through cache (ZIL SLOG), but our public cloud uses super-fast SSDs. These SSD ZIL SLOGs allow ZFS to re-order random writes to be sequential and optimize the whole storage path for especially high performance.

- Each node has a single Intel gigabit NIC

- Our public cloud nodes have four 10Gb/s NICs, trunked into two physical networks

- I'm using a single ZyXEL GX1900-8 as my top of rack switch, though any switch that will trunk VLANS (including unmanaged switches) will do

- Our public cloud uses dual top of rack switches running at 10Gb/s per port

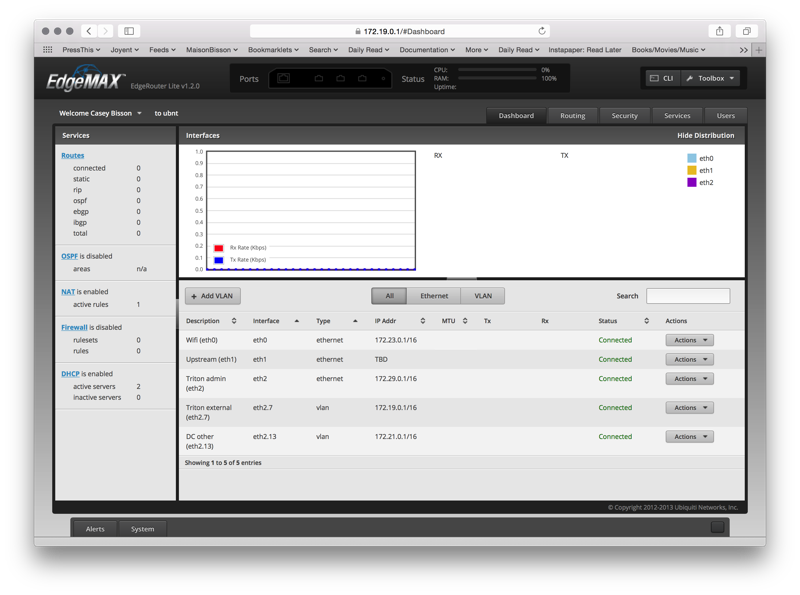

- A Ubuquiti EdgeRouter Lite is my core router, connecting me to the upstream network and routing all traffic among the private networks in my little data center

- You can imagine our public cloud core routers are very sophisticated

Despite the huge differences between the hardware in my private data center and that in our public cloud, I can still test and take advantage of all the features of Triton Elastic Container Infrastructure, just at a smaller scale.

Connecting everything

One of the first problems to solve when trying to herd servers is how to connect them. For Triton, the installation process starts with two layer 2 networks:

- Admin network: used for control communications within a single data center

- External network: used for carrying communications by customers/tenants and for any public internet facing connectivity

It's best to put those two layer 2 networks on separate NICs (or bonded pairs of NICs, as we do in our public cloud), but the NUCs have only a single Ethernet NIC. So I'm running both on the same interface: the external network on a tagged VLAN and the admin network untagged. Triton requires the admin network to be untagged, in part to support PXE booting the nodes.

On those two layer 2 networks we typically have a few layer 3 IP networks:

- Admin network: used for control communications within a single data center; this IP network is carried on the untagged admin VLAN described above

- Private customer network(s) for interconnecting containers; these can be shared IP spaces visible to all customers (and carried on the tagged external VLAN described above), or these can be private IP spaces for each customer (see the VXLAN discussion later)

- Internet-facing network(s) for exposing customer containers and Triton services publicly; this network is carried on the tagged "external" VLAN described above

Because my data center is completely private, I'm not creating that last network.

Caveat: this description of networking focuses on the initial installation and omits any discussion of VXLAN network fabrics. For now, VXLAN/network overlay setup is a post-install configuration step I'll discuss later.

All the wires, all the stuffs

Less theoretically, all these devices are connected to a switch that trunks all these VLANs across all its ports. That switch is connected to a core router that sits on some of these VLANs and IP networks, connects everything to the upstream network, and also gives me a WiFi access point I can use to connect to it.

Here's the router's configuration dashboard to show what I've done here:

How long does all this take?

Richard Kiene bragged on Twitter about how fast his installs go:

This one might take a little longer, because Triton has more services to install and configure than the old SDC that Richard was using, but it'll still go surprisingly fast for anybody who's tried to install other data center automation and management platforms.

Installing

Before beginning anything specific to installing Triton, take a moment to install the CloudAPI CLI tools and the Docker CLI, and be sure you have the sdc-docker-setup.sh script handy on your local machine.
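A quick way to confirm those tools are in place before you start is a small shell check like the one below. It's a minimal sketch: check_prereqs is a hypothetical helper, and the command names in the example (docker, sdc-listmachines from the CloudAPI tools, and json, the JSON filter used throughout this post) are my assumption about what this walkthrough calls on the local machine.

```bash
#!/usr/bin/env bash
# check_prereqs CMD...: print "missing: CMD" on stderr for each command
# that isn't on PATH, and return non-zero if any were missing.
check_prereqs() {
  local cmd missing=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example (assumed command list for this walkthrough):
#   check_prereqs docker sdc-listmachines json && echo "local tools look ready"
#   [ -x ~/sdc-docker-setup.sh ] || echo "missing: ~/sdc-docker-setup.sh"
```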

Triton boots from a USB key. This enforces statelessness of the global zone because any changes made after booting can't be persisted back to the USB key without taking explicit steps to do so.

Get the USB key image:

```bash
curl -C - -O https://us-east.manta.joyent.com/Joyent_Dev/public/SmartDataCenter/usb-latest.tgz
```

If the download doesn't complete on the first try, just repeat that curl command until it's done. The -C - argument will continue the download right where it left off.
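Rather than rerunning curl by hand, you can wrap it in a retry loop. This is a sketch using a hypothetical retry helper and an assumed RETRY_DELAY variable (seconds between attempts, default 5); because -C - resumes the partial file, each attempt picks up where the last one stopped.

```bash
#!/usr/bin/env bash
# retry MAX_ATTEMPTS CMD [ARGS...]
# Run CMD until it exits 0, giving up after MAX_ATTEMPTS tries.
# RETRY_DELAY (hypothetical, seconds) sets the pause between tries; default 5.
retry() {
  local max=$1 attempt=1
  shift
  until "$@"; do
    if (( attempt >= max )); then
      echo "giving up after $attempt attempts: $*" >&2
      return 1
    fi
    attempt=$(( attempt + 1 ))
    sleep "${RETRY_DELAY:-5}"
  done
}

# Example: -C - makes each attempt resume the partial usb-latest.tgz
#   retry 10 curl -C - -O https://us-east.manta.joyent.com/Joyent_Dev/public/SmartDataCenter/usb-latest.tgz
```

One caveat: curl exits 0 for HTTP error responses unless you add -f, so without it a 404 page would count as a successful attempt.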

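Now make a USB key with that image. Before you boot your server with it, you can add an answers file to the key so the installer doesn't have to ask for every setting.

Here's what the JSON for such a file might look like: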

```json
{
  "config_console": "vga",
  "skip_instructions": false,
  "simple_headers": false,
  "skip_final_confirm": false,
  "skip_edit_config": true,
  "datacenter_company_name": "Your Company Name",
  "region_name": "dcregion",
  "datacenter_name": "dcname",
  "datacenter_location": "My location",
  "datacenter_headnode_id": "",
  "admin_ip": "172.29.0.25",
  "admin_provisionable_start": "",
  "dhcp_range_end": "",
  "admin_netmask": "255.255.0.0",
  "admin_gateway": "172.29.0.1",
  "setup_external_network": true,
  "external_ip": "172.19.0.25",
  "external_vlan_id": "7",
  "external_provisionable_start": "",
  "external_provisionable_end": "",
  "external_netmask": "255.255.0.0",
  "external_gateway": "172.19.0.1",
  "headnode_default_gateway": "172.19.0.1",
  "dns_resolver1": "",
  "dns_resolver2": "",
  "dns_domain": "my.domain",
  "dns_search": "my.domain",
  "ntp_host": "",
  "root_password": "password",
  "admin_password": "1password",
  "api_password": "1password",
  "mail_to": "",
  "mail_from": "",
  "phonehome_automatic": ""
}
```

There's another example here that includes the answers used for CoaL as well as some arguments to do the install completely automatically with no user interaction.

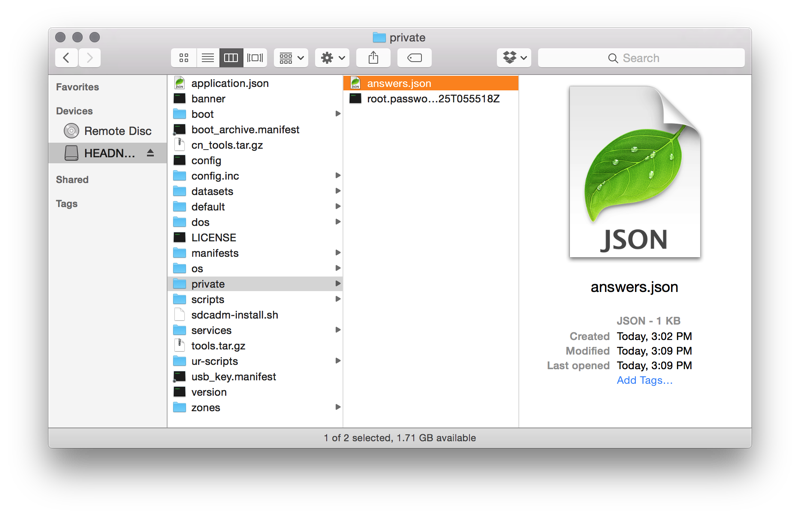

Save the file as answers.json in the /private directory of the newly made USB stick, then cleanly eject the stick, and we're ready to do the install.

The important details in that config file are the passwords and network configuration. Take special note of each of those. The networks in my case are as follows:

- 172.29.0.0/16 for the admin network
  - I set the head node's admin network IP to be 172.29.0.25
- 172.19.0.0/16 for the external network
  - I set the head node's external network IP to be 172.19.0.25

Those big RFC-1918 networks give me lots of space to play with, and because few other people use the 172 network space, I can typically get the whole space to myself (though there's no risk of conflict given the network configuration I'm using).
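Before booting, it's worth double-checking that each address in answers.json falls inside the network you meant it for. The sketch below does that in plain bash arithmetic; ip_to_int, ip_in_cidr, and check are hypothetical helpers, and the addresses are the ones from my configuration above.

```bash
#!/usr/bin/env bash
# ip_to_int A.B.C.D: print a dotted-quad IPv4 address as a 32-bit integer.
# (Octets with leading zeros would be read as octal; the addresses here have none.)
ip_to_int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

# ip_in_cidr IP NET/BITS: succeed if IP falls inside the network NET/BITS.
ip_in_cidr() {
  local ip=$1 net=${2%/*} bits=${2#*/}
  local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}

# check IP NET/BITS: print a one-line verdict.
check() {
  if ip_in_cidr "$1" "$2"; then echo "ok: $1 in $2"; else echo "FAIL: $1 not in $2"; fi
}

# Values from the answers.json above
check 172.29.0.25 172.29.0.0/16   # admin_ip
check 172.29.0.1  172.29.0.0/16   # admin_gateway
check 172.19.0.25 172.19.0.0/16   # external_ip
check 172.19.0.1  172.19.0.0/16   # external_gateway / headnode_default_gateway
```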

The installer will prompt for a few things once it boots and gets going, but after the final confirmation it's all on its own until it's done. It'll reboot once or twice, and the whole thing may take 20-30 minutes in real time.

Here's a silent screencast of that install from beginning to end:

Some other screencasts of this install that are worth noting include me doing manual entry of all the data (instead of using the answers.json file shown above), and Joyent alum Ryan Nelson demonstrating the install with pre-Triton SDC7.

Post-install configuration

Do the following on the head node. SSH in to root@172.29.0.25 if using the IP configuration above.

```bash
sdcadm post-setup common-external-nics && sleep 10 # imgapi needs external
sdcadm post-setup dev-sample-data # sample packages for docker containers
sdcadm post-setup cloudapi
sdcadm experimental update-docker --servers cns,headnode
```

The above will do some common post-install configuration, while the following will import container-native Ubuntu, CentOS, and Debian images to use with infrastructure containers:

```bash
sdc-imgadm import 0bd891a8-1e73-11e5-acf7-7704f7a4460f -S https://images.joyent.com # import container-native Linux images
sdc-imgadm import a00cef0e-1e73-11e5-b628-0f24cabf6a85 -S https://images.joyent.com
sdc-imgadm import 36c1c12a-1b7e-11e5-8f38-eba28d6b3ec6 -S https://images.joyent.com
```

Now use these three commands to get the IPs for the various services we'll want to connect to:

```bash
vmadm lookup -j tags.smartdc_role=~adminui | json -aH nics | json -a -c 'this.nic_tag === "external"' ip # admin UI IP
vmadm lookup -j tags.smartdc_role=~cloudapi | json -aH nics | json -a -c 'this.nic_tag === "external"' ip # CloudAPI IP address
vmadm lookup -j tags.smartdc_role=~docker | json -aH nics | json -a -c 'this.nic_tag === "external"' ip # Docker API IP address
```

On your local machine (this works well on macOS or Linux), add hosts file entries for some of the services we'll be using. Your IPs may vary; use the ones reported by the vmadm lookup commands we ran on the head node a moment ago. This works for me, though I need to become root with sudo -s first:

```bash
cat >> /etc/hosts << __EOF
# Triton elastic container infrastructure
172.19.0.26 admin.triton.mobile
172.19.0.28 cloudapi.triton.mobile
172.19.0.29 docker.triton.mobile
172.19.0.29 my.sdc-docker
__EOF
```

On your local machine, point your browser to https://admin.triton.mobile, which should now map to the external IP returned by the vmadm lookup -j tags.smartdc_role=~adminui command above.
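Running that cat >> command a second time appends duplicate entries. If you expect to repeat this step (I reinstalled a few times while writing this), a small idempotent helper is one option. add_host_entry is hypothetical; it skips a hostname that already appears on a non-comment line of the file.

```bash
#!/usr/bin/env bash
# add_host_entry FILE IP HOSTNAME
# Append "IP<TAB>HOSTNAME" to FILE unless HOSTNAME is already mapped there.
add_host_entry() {
  local file=$1 ip=$2 name=$3
  # awk exits 0 only if a non-comment line lists $name after its IP field
  if awk -v n="$name" '
      !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }' "$file" 2>/dev/null; then
    echo "skip: $name already in $file"
  else
    printf '%s\t%s\n' "$ip" "$name" >> "$file"
  fi
}

# Example, as root (IPs are from my vmadm lookups; yours may differ):
#   add_host_entry /etc/hosts 172.19.0.26 admin.triton.mobile
#   add_host_entry /etc/hosts 172.19.0.28 cloudapi.triton.mobile
#   add_host_entry /etc/hosts 172.19.0.29 docker.triton.mobile
#   add_host_entry /etc/hosts 172.19.0.29 my.sdc-docker
```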

Create a customer account

Create a user account for yourself at https://admin.triton.mobile/users (again, your IP may vary), and be sure to enter an SSH key. You can create accounts for others too, but let's get started one step at a time.

You can also create the user account via the CLI on the head node:

```bash
echo '{
  "approved_for_provisioning": "true",
  "company": "Acme Brands",
  "email": "email@domain.net",
  "givenname": "FirstName",
  "cn": "FirstName LastName",
  "sn": "LastName",
  "login": "username",
  "phone": "415 555 1212",
  "userpassword": "1password"
}' | sdc-useradm create
```

With those in place we can set up API access. If you don't have your SSH key in your Triton account, let's put it there now. The following commands will copy the key from my laptop to the customer account in Triton (obviously, if this account were for a customer other than myself, I'd want to fetch it from a different location).

```bash
scp ~/.ssh/id_rsa.pub root@172.29.0.25:/var/tmp/id_rsa.pub
ssh root@172.29.0.25 '/opt/smartdc/bin/sdc-useradm add-key /var/tmp/id_rsa.pub'
```

Let's also make sure that account is "approved for provisioning."

```bash
ssh root@172.29.0.25 '/opt/smartdc/bin/sdc-useradm replace-attr approved_for_provisioning true'
```

There's no self-service way for users/customers to create accounts for themselves in Triton (the customer portal we use for the Joyent public cloud is not part of the open source release), so these steps all need to be done by an administrator.

Configure the local environment for the customer account

Set environment variables for CloudAPI. The IP address of CloudAPI was returned by the vmadm lookup -j tags.smartdc_role=~cloudapi command above, but we should be able to reach it with the cloudapi.triton.mobile hostname now that we've added that mapping.

```bash
export SDC_URL=https://cloudapi.triton.mobile
export SDC_TESTING=1 # allows self-signed SSL certs, is insecure
```

Triton presents a Docker API endpoint that the Docker CLI or other tools can use to provision containers. The sdc-docker-setup.sh script helps with that until https://github.com/docker/machine/pull/1197 is merged and released.

```bash
~/sdc-docker-setup.sh -k -s cloudapi.triton.mobile ~/.ssh/id_rsa
```

That will output two sets of instructions. The default is to turn off TLS verification, but I want to follow the suggestions for enabling TLS. The following will update the environment var script to do that.

```bash
head -5 ~/.sdc/docker//env.sh > ~/sdc-docker-env.sh
echo 'export DOCKER_HOST=tcp://my.sdc-docker:2376' >> ~/sdc-docker-env.sh
echo 'export DOCKER_TLS_VERIFY=1' >> ~/sdc-docker-env.sh
```

And now I can source the environment var script and test Docker!

```bash
source ~/sdc-docker-env.sh
docker info
```

Alright, let's try something for real...

```bash
docker run --rm -it ubuntu bash
```

Oh noes! Error:

```
FATA[0463] Error response from daemon: (DockerNoComputeResourcesError) No compute resources available. (19f32bc2-9b06-4e6b-a642-97e9c4a7c30e)
```

I can see the error details by looking at the CNAPI logs. Starting from the head node, try:

```bash
sdc-login cnapi
grep -h snapshot `svcs -L cnapi` /var/log/sdc/upload/cnapi_* \
  | bunyan -c this.snapshot -o bunyan --strict \
  | tail -1 \
  | json -ga snapshot \
  | while read snap; do echo "$snap" | base64 -d | gunzip - | json ; done
```

The thing to look at in there is the list of steps it went through, filtering out nodes on which to run the Docker container. We only have the one node, the head node, so that's its UUID we see in the list almost all the way to the end:

```json
"steps": [
  {
    "step": "Received by DAPI",
    "remaining": ["80a1abd2-d434-e111-82ac-c03fd56f115a"]
  },
  {
    "step": "Servers which have been setup",
    "remaining": ["80a1abd2-d434-e111-82ac-c03fd56f115a"]
  },
  {
    "step": "Servers which are currently running",
    "remaining": ["80a1abd2-d434-e111-82ac-c03fd56f115a"]
  },
  {
    "step": "Servers objects which are valid",
    "remaining": ["80a1abd2-d434-e111-82ac-c03fd56f115a"]
  },
  {
    "step": "Servers containing VMs required for volumes-from",
    "remaining": ["80a1abd2-d434-e111-82ac-c03fd56f115a"]
  },
  {
    "step": "Add VMs which have open provisioning tickets",
    "remaining": ["80a1abd2-d434-e111-82ac-c03fd56f115a"]
  },
  {
    "step": "Calculate localities of owner's VMs",
    "remaining": ["80a1abd2-d434-e111-82ac-c03fd56f115a"]
  },
  {
    "step": "Servers which are not reserved",
    "remaining": ["80a1abd2-d434-e111-82ac-c03fd56f115a"]
  },
  {
    "step": "Servers which are not headnodes",
    "remaining": []
  }
]
```

The last step is where it failed, because it couldn't find any usable nodes. We typically[^1] can't provision containers on the head node, so we need to add a compute node before we really get started.
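If you find yourself reading DAPI snapshots often, the decoding half of the CNAPI log pipeline above can be pulled into a function. This is a sketch that assumes, as that pipeline implies, each snapshot is a gzip-compressed JSON document encoded as base64 on a single line; decode_snapshots is a hypothetical name.

```bash
#!/usr/bin/env bash
# decode_snapshots: read base64 text on stdin, one snapshot per line,
# then base64-decode and gunzip each one and print it on its own line.
decode_snapshots() {
  local snap
  # "|| [ -n ... ]" also handles a final line with no trailing newline
  while IFS= read -r snap || [ -n "$snap" ]; do
    [ -n "$snap" ] || continue          # ignore blank lines
    printf '%s\n' "$snap" | base64 -d | gzip -dc
    echo                                # end each document with a newline
  done
}

# Example on the head node, in place of the while loop in the pipeline above:
#   ... | tail -1 | json -ga snapshot | decode_snapshots | json
```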

Add a compute node

Adding a server is almost as easy as plugging it in. Once it net boots, it will join the Triton data center. Go to https://admin.triton.mobile/servers and you should find the server there, waiting to be accepted into the data center and get its final configuration. Most of the configuration is automated, but one step that isn't is adding all the NIC tags. I want this compute node to have access to the external network, so I've added that tag.

Now let's try that docker run again...

```bash
docker run --rm -it ubuntu bash
```

Oh yeah! We're in a container. Let's run htop and see what CPUs it sees:

```bash
apt-get update -y && apt-get install -y htop && htop
```

One container isn't enough, though. Let's make lots more:

```bash
docker pull nginx
for i in {1..16}; do
  docker run -d -m 128m -P --name=nginx-demo-$i nginx &
  sleep .7
done
```

Let's make a request from every one of those containers:

```bash
docker inspect $(docker ps -a -q) | \
  json -aH -c '/^\/nginx-/.test(this.Name)' NetworkSettings.IPAddress | \
  sed -E 's/(.*)/http:\/\/\1/' | \
  xargs -n 1 curl
```

Make more containers!

Infrastructure containers are an elastic, bare metal alternative to hardware virtual machines. Let's create one running Couchbase:

```bash
curl -sL -o couchbase-install-triton-centos.bash https://raw.githubusercontent.com/misterbisson/couchbase-benchmark/master/bin/install-triton-centos.bash

sdc-createmachine \
  --image=$(sdc-listimages | json -a -c "this.name === 'centos-6' && this.type === 'smartmachine'" id | tail -1) \
  --package=$(sdc-listpackages | json -a -c "this.memory === 2048" id | tail -1) \
  --networks=$(sdc-listnetworks | json -a -c "this.name ==='external'" id) \
  --script=./couchbase-install-triton-centos.bash
```

This is slightly modified from my instructions for doing this in the public cloud, because some of the package and network details are different. Use sdc-getmachine or sdc-listmachines, or look in the dashboard, to get the IP of this infrastructure container.

Confirm that everything is installed:

```bash
curl -sL https://raw.githubusercontent.com/misterbisson/couchbase-benchmark/master/bin/install-triton-centos.bash | bash
```

Create some load:

```bash
curl -sL https://raw.githubusercontent.com/misterbisson/couchbase-benchmark/master/bin/benchmark.bash | bash
```

You want real SQL, though? Let's install PostgreSQL. This time we'll start with Ubuntu rather than CentOS:

```bash
sdc-createmachine \
  --image=$(sdc-listimages | json -a -c "this.name === 'ubuntu-14.04' && this.type === 'smartmachine'" id | tail -1) \
  --package=$(sdc-listpackages | json -a -c "this.memory === 2048" id | tail -1) \
  --networks=$(sdc-listnetworks | json -a -c "this.name ==='external'" id)
```

Look in the dashboard or use sdc-listmachines to get the IP of this infrastructure container and ssh in, then install Postgres:

```bash
apt-get update -y && apt-get install -y postgresql-contrib postgresql htop
```

Congratulations, you just containerized Postgres!

```bash
su - postgres
i=0; while true; do date; time pgbench -i -s 19 -c 7 ; echo "Iteration $i complete --- --- --- --- ---" ; ((i++)); done
```

A note about terminology

Joyent has been running containers for nearly ten years, but for most of that time people thought in terms of virtual machines, so the old SmartDataCenter used that language when describing containers. You'll see "VM" and "machine" throughout the admin UI, on the command line, and in the APIs, when what is really meant is "container" or "instance." Similarly, you'll see "sdc" in many places, especially in various commands. Keep that in mind as you work with Triton and you'll do well.

This is probably also a good time to describe the three types of compute that Triton supports:

- Docker containers running your choice of images from public and private registries

- Infrastructure containers running container-native Linux and SmartOS, and the software that runs on those. Canonical has certified Ubuntu in Triton infrastructure containers, and other distributions are also available.

- Virtual machines running KVM inside a container to support Windows, as well as legacy or special purpose operating systems

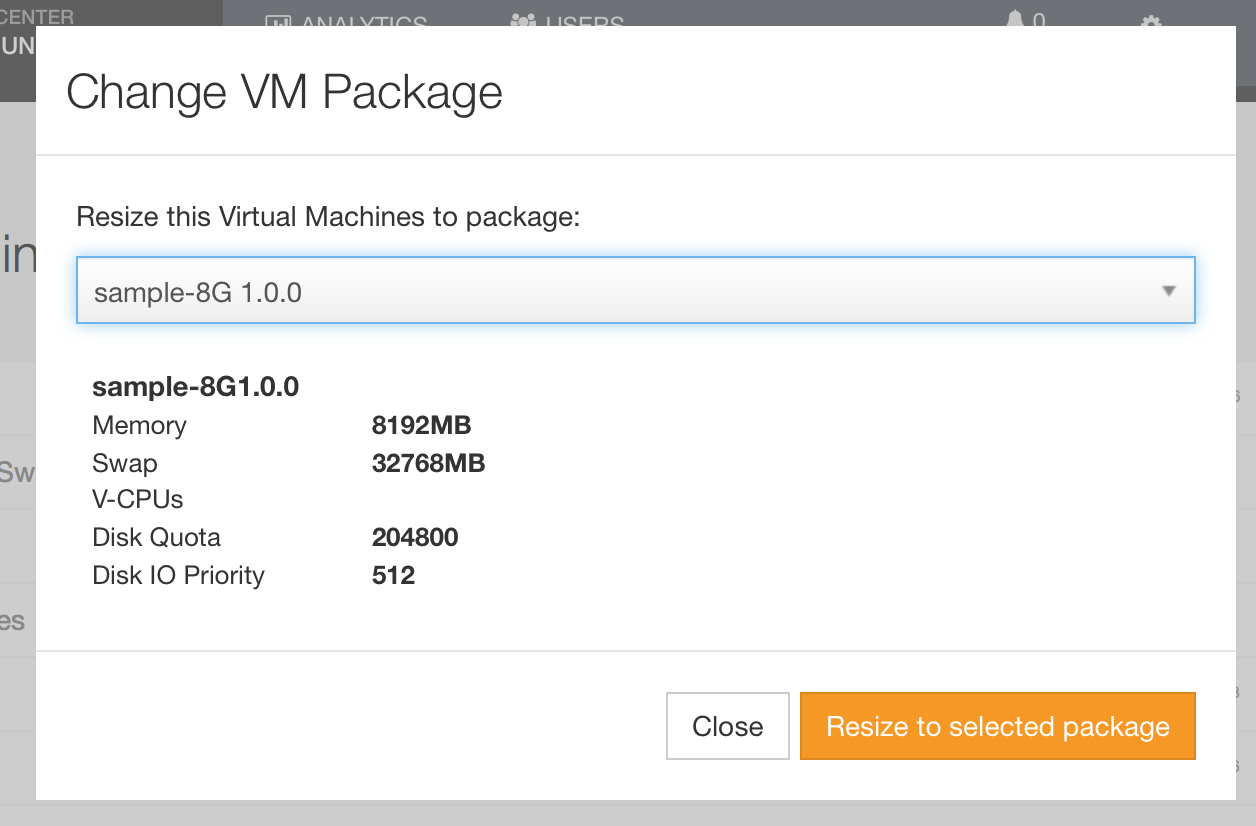

Resize a container!

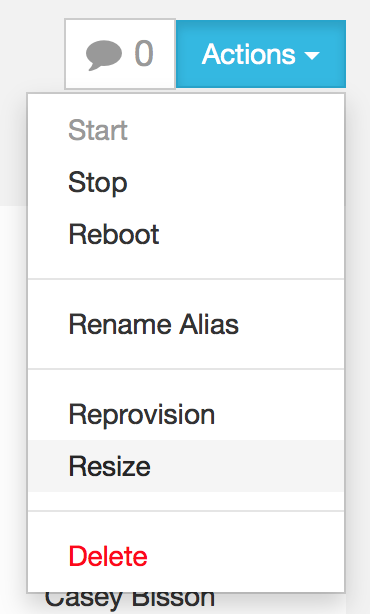

Unlike VMs, containers can be resized on the fly, without rebooting them. This allows us to add more RAM, compute, or storage as needed. We can do this using the ops portal, or via CloudAPI.

To do this with CloudAPI, let's first figure out which container we'll resize from a list of all our containers:

```bash
sdc-listmachines | json -a primaryIp id memory name
```

From that list I'm picking an infrastructure container to resize using the command below:

```bash
# Replace MEMORY_MB with the memory (in MB) of the package you want,
# and CONTAINER_UUID with the id of the container to resize
sdc-resizemachine --package=$(sdc-listpackages | json -a -c "this.memory === MEMORY_MB" id | tail -1) CONTAINER_UUID
```

Be sure to fill in the package and container details.

Inside the container, I can run free -m to see the memory information and confirm that it's been resized.
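If you'd rather script that check than eyeball free -m, reading MemTotal from /proc/meminfo gives essentially the total that free -m reports, in a form that's easy to compare against the package size. A sketch, assuming a Linux container (infrastructure or Docker) that reports MemTotal in kB; mem_total_mb is a hypothetical helper.

```bash
#!/usr/bin/env bash
# mem_total_mb [FILE]: print MemTotal in MB from a /proc/meminfo-style file
# (default /proc/meminfo). Assumes the value is reported in kB, as Linux does.
# Returns non-zero if no MemTotal line is found.
mem_total_mb() {
  awk '/^MemTotal:/ { printf "%d\n", $2 / 1024; found = 1 } END { exit !found }' \
    "${1:-/proc/meminfo}"
}

# Example inside the resized container; compare the result with the
# memory (MB) of the package you resized to:
#   echo "visible memory: $(mem_total_mb) MB"
```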

Somebody here asked for DTrace?

Here it is. Start by adding /native to your path:

```bash
export PATH=$PATH:/native/usr/bin:/native/usr/sbin:/native/bin
```

Now pick one of the containers we created earlier that's doing some work, then look at what's running using prstat:

```bash
prstat -mLc
```

Now, for DTrace, try this:

```bash
dtrace -n "syscall:::entry
{
  self->syscall_entry_ts[probefunc] = vtimestamp;
}
syscall:::return
/self->syscall_entry_ts[probefunc]/
{
  @time[probefunc] = lquantize((vtimestamp - self->syscall_entry_ts[probefunc]) / 1001, 0, 5, 1);
  self->syscall_entry_ts[probefunc] = 0;
}"
```

Let that run for a bit while the container does some work, then press Ctrl-C and see what you get.

Handling updates

Updating Triton is easy too. There are two different areas to update: the services and the platform image (PI). The services can be updated without a reboot, though each one goes offline for a moment while it's being updated. A platform image update, however, requires a reboot.

To update the platform image, run the following on the head node:

```bash
sdcadm self-update
sdcadm platform install --latest
new_pi=$(sdcadm platform list -j | json -a -c 'latest==true' version)
sdcadm platform assign "$new_pi" --all
sdc-cnapi /boot/default -X PUT -d "{\"platform\": \"$new_pi\"}" > /dev/null
```

That will set each node to boot the new platform image on its next reboot, but it won't trigger a reboot. You can then schedule reboots of the different nodes as needed to suit your uptime requirements.

Updating the services is easier:

```bash
sdcadm self-update
sdcadm update --all -y
```

Total reset

I've been going through this script a few times myself, so I've had to reset everything and try it all again to test it. Here's how I do that:

- Boot each node into recovery mode, log in as root, then run zpool import zones and zpool destroy zones
- Remove the config file from the USB key
- Remove any relevant known_hosts entries from your local machine
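The known_hosts cleanup can be scripted with ssh-keygen -R, which removes every key recorded for a host. In this sketch, forget_triton_hosts is hypothetical and the host list is an assumption: it starts with the head node's admin IP from this walkthrough, and you'd add the IPs of any containers you ssh'd into.

```bash
#!/usr/bin/env bash
# Hosts whose SSH keys change when everything is reinstalled. The head node's
# admin IP is from this walkthrough; add container IPs you've ssh'd into.
TRITON_HOSTS=(172.29.0.25)

# forget_triton_hosts [KNOWN_HOSTS]: remove each host's saved keys
# (ssh-keygen -R keeps the previous contents in KNOWN_HOSTS.old).
forget_triton_hosts() {
  local file=${1:-$HOME/.ssh/known_hosts} h
  for h in "${TRITON_HOSTS[@]}"; do
    ssh-keygen -R "$h" -f "$file"
  done
}

# Example: forget_triton_hosts   # uses ~/.ssh/known_hosts
```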

[^1]: We can allow customer provisioning on the head node, but that's not advised. If you really must do it, sdcadm post-setup dev-headnode-prov will make it work.