Enterprise Native Mobile Apps with Node.js & Containers (Synchro Labs)

December 21, 2016 - by Bob Dickinson, President and CEO, Synchro Labs

Building high-quality native mobile apps can take significant time and money, and often requires skill sets that enterprises don’t have in house. The resulting apps can be difficult to manage, update, and secure.

While many organizations have deployed their “hero” customer-facing mobile app, very few have made progress migrating their in-house enterprise and line-of-business apps to mobile.

Microservices built with Node.js were already being used as primary mobile backend APIs. However, seamless integration between native frontends and backend APIs has been challenging and time-consuming. On the flip side, full-stack JavaScript solutions (like MEAN) lacked the native optimization needed for mobile apps.

In this case study, we showcase a groundbreaking solution from Synchro Labs for building enterprise-ready native mobile apps. It uses JavaScript, Node.js, and containers as its core technologies, and is deployed on Joyent’s Triton Container Native Cloud for high performance and cost optimization.

The Cloud-Based Mobile App Platform for Node.js

The Synchro platform allows enterprise developers to create high quality, high performance, cross-platform native mobile applications using simple and familiar tools - JavaScript and the Node.js framework. Apps created with the Synchro platform install and run natively on mobile devices, but all application code, including client interaction logic, runs in the cloud under Node.js.

The Synchro Solution

Synchro offers a solution that makes it easy for either front-end web developers or back-end Node.js developers to use their existing JavaScript/Node.js skills to build mobile client apps that present as native apps to end users, but are deployed and managed like web apps.

With Synchro, you get:

- High quality native mobile client apps

- Very short learning curve

- Significantly less code to write/manage

- Applications can be updated in real time

- Security is baked-in/automatic

What Makes Synchro Unique?

There are many options in the mobile app development platform space, including HTML5 based solutions, Phonegap/Cordova solutions, Appcelerator, Xamarin, React Native, Ionic, and others.

The main difference between Synchro and all of these technologies is that with Synchro your mobile application code lives and runs on the server. That gives Synchro tremendous advantages in ease of integration, developer productivity, manageability, and security.

Technology stack

Synchro solutions consist of a Synchro Native Client app or apps, and a set of server technologies referred to collectively as Synchro Server. The Synchro Server core technology stack consists of Node.js, the Synchro Server Node.js app and related services, and a set of Node.js packages used by Synchro. Our technology choices are described in more detail in the Appendix.

Solution Architecture

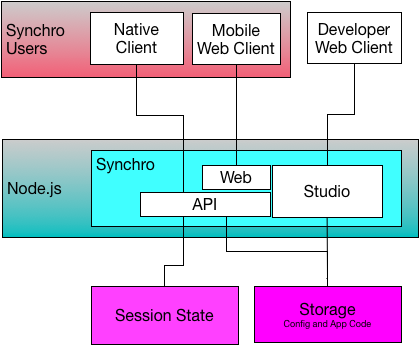

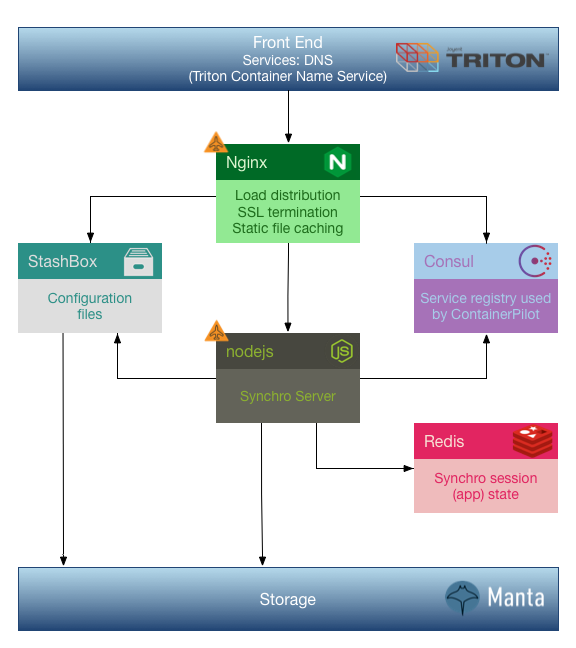

The diagram below represents the major components of the technology stack and their relationships to each other:

While this diagram shows the major architectural elements, it does not address operational or deployment considerations.

For example, the Synchro Server App running under Node.js will typically need to scale to more than one instance, meaning that it will need to sit behind a load balancer of some kind, and be controllable by an orchestration/scheduling solution of some kind.

As a solution vendor, it is of paramount importance that our solution be able to run in any deployment environment or solution architecture required by our customers. The internal support for a variety of session state and storage solutions is a good example of the kind of flexibility that has been engineered into Synchro. We show those generally as “Session State” and “Storage” in the diagram above, but those will typically connect to either platform services provided by your managed service platform, or to containers that you provide in a container-based solution.

Deployment considerations and reference architectures for deployment will be discussed below.

Managed Services Deployment

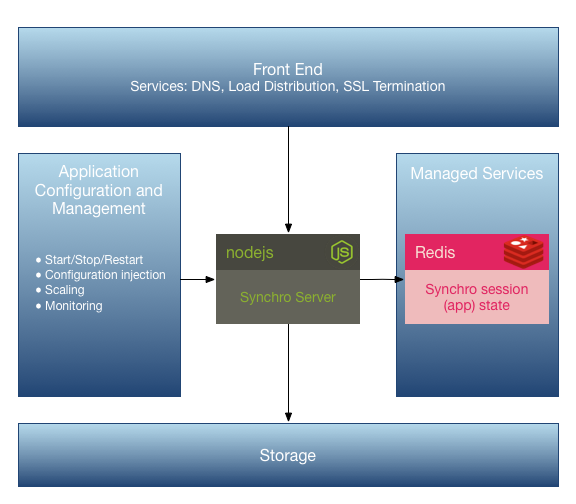

We originally deployed our own production Synchro server on Microsoft Azure as an Azure Web Service, and we relied on various managed services available within the Azure environment for everything else. Here is what our production server deployment looked like on Microsoft Azure:

While this solution was workable, it had some downsides. Among other things, we had a lot of DevOps issues, and it was very difficult to establish and maintain staging environments for test or dev (it could be done, but it wasn’t fun).

We knew that a container-based solution would be attractive to customers, and we wanted it for ourselves so that we could have some platform mobility for our own production Synchro server.

Containers 1.0 - Basic Container Support

Back in February, we blogged about Deploying Synchro using Docker. At that time we created a Dockerfile, which we began bundling with our installation to make it easy to package an installed, configured Synchro installation into a Docker image.

That Dockerfile looked like this:

FROM node:argon

## Create app directory

RUN mkdir -p /usr/src/app

WORKDIR /usr/src/app

## Bundle app source

COPY . /usr/src/app

## Install deps

RUN npm install

RUN cd synchro-apps && npm install

ENV SYNCHRO__PORT 80

EXPOSE $SYNCHRO__PORT

CMD [ "node", "app.js" ]

While this was a good start and got us experimenting with running Synchro in a container, it was a long way from a complete solution. We decided the best way to arrive at a true container-based solution was to eat our own dog food by deploying our own production Synchro API server in containers.

Containers 2.0 - Self-Orchestrating Microservices

When we started the process of containerizing our production API server, we quickly learned that there is a lot more to containerizing a solution than just wrapping your app in a Docker image. Our app, like many apps, runs as one part of a system of interconnected services. When running in a pure managed environment (like Azure), those services are provided for you, and your interface to/from those services abstracts you from their implementation/operations. When running an orchestration of micro-services in containers, you are responsible for the entire solution (creating, configuring, and running all of the micro-service containers and connecting them to each other).

Orchestration solutions, like Docker Compose, Mesosphere Marathon, Google Kubernetes, and others, provide tools to support these complex container deployments and interactions, and guidance for modifying your application to support that tooling. But as a solution provider ourselves, we were looking for a solution that supported whichever orchestration platform our customers had chosen, with little or no further integration required.

We had two main goals in undertaking orchestration support:

- Provide a true container-based reference architecture for our customers/users that works with any orchestration platform

- Use that support ourselves to migrate our own production API server from a proprietary, vendor-specific managed service deployment to an open-standards, portable, container-based deployment

The AutoPilot Pattern using ContainerPilot

Our friends at Joyent made us aware of a solution that they promote called The AutoPilot Pattern using ContainerPilot, which Joyent has documented well. Note that while Joyent actively promotes and supports this solution and its components, it is not Joyent-specific (everything involved is open source and will work on any platform that can run containers).

This solution delivers "app-centric micro-orchestration", where containers collaborate to adjust to each other automatically (both as containers scale, and as they become healthy/unhealthy), without needing any help from your orchestration system. These containers run on "auto-pilot".

All the orchestration solution / scheduler has to do is run your containers, and the containers themselves will self-organize. As a solution provider ourselves, this feature was a must-have. Our customers can deploy our AutoPilot containers, from publicly published images, with no changes, regardless of the orchestration system they use, and everything will just work. And if a customer changes from one orchestration system / scheduler to another, no changes are required to any Synchro micro-service Docker images.
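To make the self-organizing idea concrete, here is a minimal sketch of what a proxy container conceptually does when the set of healthy backends changes: it filters the registered instances by health and regenerates its backend list. This is not ContainerPilot's or Consul's API. The shape of the instance records and the function names are assumptions made purely for illustration.

```javascript
// Hypothetical registry snapshot: each instance reports an address, a port,
// and whether its health check is currently passing.
function healthyInstances(instances) {
  return instances.filter((instance) => instance.healthy);
}

// Render an Nginx-style upstream block from the healthy instances only.
function renderUpstream(name, instances) {
  const servers = healthyInstances(instances)
    .map((instance) => `    server ${instance.address}:${instance.port};`)
    .join('\n');
  return `upstream ${name} {\n${servers}\n}`;
}

// Example: the unhealthy instance is left out of the generated block.
console.log(
  renderUpstream('synchro', [
    { address: '10.0.0.1', port: 80, healthy: true },
    { address: '10.0.0.2', port: 80, healthy: false },
  ])
);
```

In the real pattern, this regeneration is triggered by changes in the service registry rather than called by hand, which is why the scheduler does not need to be involved.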

Synchro AutoPilot Reference Architecture

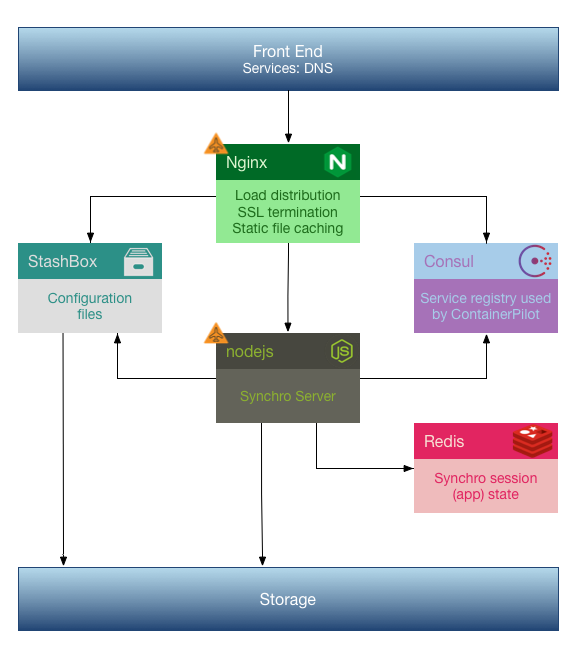

The Synchro AutoPilot reference architecture is based on Docker and ContainerPilot:

- Docker: All services running in the reference architecture run in Docker containers

- ContainerPilot: Allows the system to scale automatically regardless of orchestration solution (AutoPilot pattern)

In a container-based solution we have to specify and provide all services required to run the solution. The only solution element that we require and for which we do not provide a container is storage (all supported platforms provide storage as a service).

The reference architecture includes the following Docker containers:

- Node.js: The Synchro Server itself runs as a Node.js service

- Redis: Application session management (end-user app state)

- Consul: Service registry

- Nginx: Load balancing, SSL termination, static file caching

- StashBox: Configuration support (env, file system, or storage)

Implementing Micro-Orchestration

The first step in our process was to build a Docker container for our app, and to either create or find Docker containers for the other elements of our solution.

We then built a deployment blueprint that described all of the containers and their relationships to each other. We chose docker-compose to do this, but any scheduler would work here.

Finally, we identified the containers that needed to scale and added ContainerPilot to those containers. In our case, the Nginx proxy and our Synchro Node.js containers were the only containers that needed to scale in order for our solution to scale.

Our fully containerized solution looks like this:

Note that we will always run two or more Nginx and Synchro containers, and we will run Consul in a raft of three containers, and Redis in a cluster of three containers, so a minimal deployment will consist of ten containers, all working together (automatically). And of course we can scale out Nginx or Synchro as needed to handle load and the system will handle that automatically.
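The container count above is simple arithmetic over the replica counts. The sketch below restates it so the effect of scaling out is easy to see. The object and function names are ours, chosen for illustration only.

```javascript
// Replica counts for the minimal deployment described above.
const minimalDeployment = {
  nginx: 2,   // "two or more" Nginx proxies
  synchro: 2, // "two or more" Synchro Node.js containers
  consul: 3,  // Consul raft of three
  redis: 3,   // Redis cluster of three
};

// Sum the replica counts across all services.
function totalContainers(deployment) {
  return Object.values(deployment).reduce((sum, count) => sum + count, 0);
}

console.log(totalContainers(minimalDeployment)); // 10

// Scaling Synchro out to four instances only changes that one count.
console.log(totalContainers({ ...minimalDeployment, synchro: 4 })); // 12
```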

We aren't going to cover the implementation details of the AutoPilot Pattern using ContainerPilot, as Joyent has excellent documentation and many implementation samples. If you would like to see our implementation, we have published it on GitHub and you are free to reference it and use it as desired.

Container Best Practices

We learned some lessons when implementing our apps and services in containers. If you undertake a similar effort, you should consider the following...

Flexible Configuration

Getting configuration into micro-services is one of the significant challenges in these types of solutions. With Docker containers, we have the option to build configuration into the container itself using environment variables or bundled files, or we can inject configuration at runtime using environment variables or Docker volumes. There are pros and cons to each of these mechanisms. As a solution provider, we want our own solution to be non-opinionated, which is a fancy way of saying that we want to support any of these mechanisms (including combinations).

To support the broadest range of configuration options, we recommend the following:

- Support configuration via environment variables where possible - In order to support flexible configuration, support environment variables as broadly as possible.

- Any file-based configuration should have a configurable local path with a reasonable default - This is particularly important if you intend to inject configuration using Docker volumes. Having a configurable local file path allows you to map volumes to locations where they will not interfere with other configuration elements or potentially leak other local files from the container to the volume unintentionally.

- Any file-based configuration should be able to get its contents from a base64 encoded environment variable - It can be tricky to pass binary contents via environment variables, and even for multi-line text files, the differences between the support of Docker command line, the Docker env file, and Docker Compose make this more complex than it needs to be. Supporting base64 encoded environment variables is easy to do and works in all modalities of environment configuration (whether build-time or run-time).
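For a Node.js service, the three recommendations above might be combined along the following lines. This is a sketch under stated assumptions, not the Synchro implementation: the option names (`pathVar`, `base64Var`, `defaultPath`) and the example file contents are hypothetical.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Resolve a file-based configuration item:
//  1. the local path can be set through an environment variable,
//  2. it falls back to a reasonable default,
//  3. the contents may be supplied as a base64-encoded environment variable.
function resolveConfigFile(env, { pathVar, base64Var, defaultPath }) {
  const filePath = env[pathVar] || defaultPath;
  const encoded = env[base64Var];
  if (encoded) {
    // Decode to raw bytes so binary files (certificates, keys) survive intact.
    fs.mkdirSync(path.dirname(filePath), { recursive: true });
    fs.writeFileSync(filePath, Buffer.from(encoded, 'base64'));
  }
  return filePath;
}

// Example: supply a file through the environment at run time.
const exampleEnv = {
  SSL_CERTS_PATH: path.join(os.tmpdir(), 'synchro-example', 'ssl.crt'),
  SSL_CERTS_BASE64: Buffer.from('example certificate contents\n').toString('base64'),
};
const certPath = resolveConfigFile(exampleEnv, {
  pathVar: 'SSL_CERTS_PATH',
  base64Var: 'SSL_CERTS_BASE64',
  defaultPath: '/etc/ssl/certs/ssl.crt',
});
console.log(fs.readFileSync(certPath, 'utf8'));
```

When neither variable is set, the function simply returns the default path and leaves any file already at that location (built into the image or mounted as a volume) untouched.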

ContainerPilot makes it very easy to do the above, as well as supporting flexible configuration in general, with its preStart entrypoint. The following is an example of the ContainerPilot preStart in our Nginx container:

#!/bin/sh

preStart()

{

: ${SSL_CERTS_PATH:="/etc/ssl/certs/ssl.crt"}

if [ -n "$SSL_CERTS_BASE64" ]; then

echo "$SSL_CERTS_BASE64" | base64 -d > "$SSL_CERTS_PATH"

fi;

# >>> Process ssl.key, any other files as above

# Rest of preStart logic (consul-template, etc)

}

With this kind of configuration, we can do any of the following:

- Copy the ssl.crt file into our image at the default location with no environment variables (build-time)

- Populate the ssl.crt file from an environment variable (build-time or run-time)

- Supply the ssl.crt file from a Docker volume to a custom location (run-time)

Embrace the Proxy

Solutions consisting of micro-services will typically require a proxy server. We happen to use Nginx as our proxy. It is not unusual for comparable implementations to use HAProxy.

While managed services solutions provide much of this functionality for you, micro-service solutions often require you to handle some or all of the following yourself:

Load distribution

The primary function of the proxy is to distribute traffic among your services. ContainerPilot, through its use of Consul and consul-template, more or less handles this part for you.

If you have session affinity requirements (as Synchro does), make sure your proxy configuration accommodates them. With Nginx, we use ip_hash for session affinity.
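The idea behind IP-hash affinity is a deterministic mapping from client address to backend, so that a given client keeps landing on the same instance. The sketch below illustrates that idea only. It is not Nginx's actual hash function, and the backend names are hypothetical. Nginx's documentation describes ip_hash as keying on the first three octets of an IPv4 address (or the entire IPv6 address), which the sketch mirrors.

```javascript
// Key on the first three octets of an IPv4 address, so clients in the same
// /24 network share a backend; otherwise use the whole address.
function affinityKey(clientIp) {
  const octets = clientIp.split('.');
  return octets.length === 4 ? octets.slice(0, 3).join('.') : clientIp;
}

// Map a client address to one of the backends with a simple string hash.
function pickBackend(clientIp, backends) {
  const key = affinityKey(clientIp);
  let hash = 0;
  for (const ch of key) {
    hash = (hash * 31 + ch.charCodeAt(0)) >>> 0; // keep as unsigned 32-bit
  }
  return backends[hash % backends.length];
}

// Example: both addresses share the key "10.0.0" and so share a backend.
const backends = ['synchro-1', 'synchro-2', 'synchro-3'];
console.log(pickBackend('10.0.0.1', backends) === pickBackend('10.0.0.200', backends)); // true
```

One consequence of this kind of scheme is that adding or removing a backend changes the mapping for some clients, which is one reason session state is kept in an external store such as Redis rather than in the app process.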

SSL termination

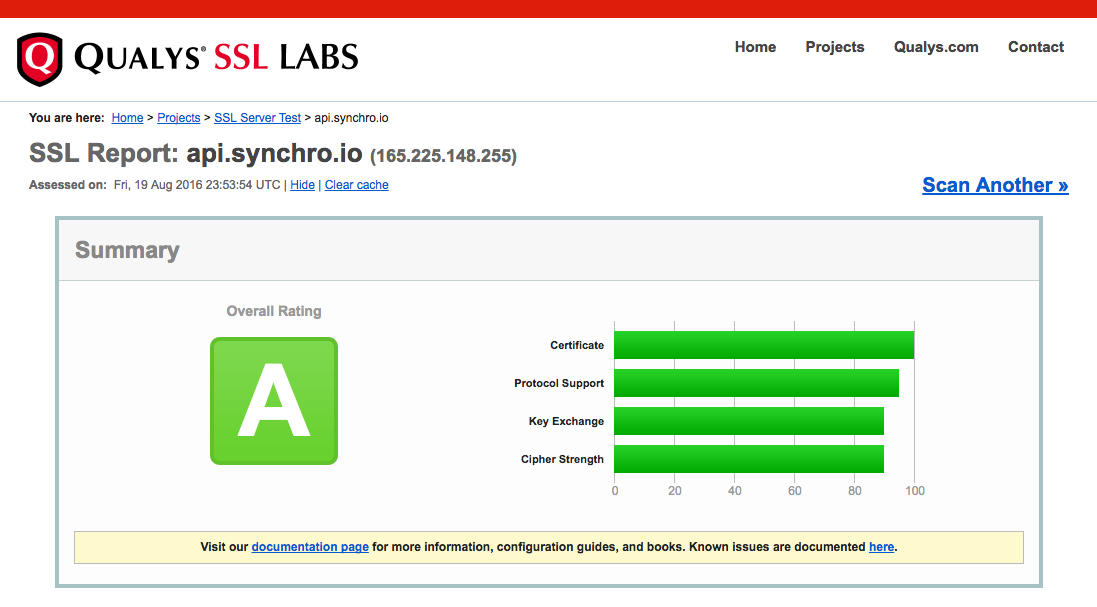

Another significant function of the proxy in micro-container orchestrations is to terminate SSL connections. This requires basic SSL/TLS configuration, as well as injection of SSL credentials into the proxy container. While this can be a cumbersome process, it does allow you to tune your SSL implementation for performance and security.

When you have configured SSL successfully, you should run an external validation test like the one from SSL Labs.

You will also likely want to forward non-SSL traffic to your SSL endpoint.

Static file caching

In our managed services deployment, we served all static files from a CDN (Content Distribution Network). Because we did not want to rely on target environments having CDN support, we instead implemented static file caching in Nginx (with support from Synchro). We found the performance to be comparable to a CDN, and the deployment/maintenance complexity to be much lower with this approach.

Websocket support

Not all proxies that support HTTP traffic will support WebSockets out of the box. Nginx in particular requires specific configuration to support WebSockets.

Proxy Config in Practice

Our Nginx configuration template does all of the above. See: Synchro AutoPilot nginx.conf.ctmpl on GitHub

Prepare Your App

Your application may require some modification in order to be well-behaved in a ContainerPilot deployment:

Health check

You should implement a health check if your app does not already have one. We recommend that the health check endpoint be configurable in your app. We also recommend that you take care not to expose the health check endpoint unintentionally (your app can check to see if the connecting host is the local machine, or you can have your proxy deny access to the health check from external clients, as appropriate).
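A minimal sketch of such a health check in a plain Node.js HTTP handler follows. It assumes the check is called from inside the same container, so only loopback callers are answered. The `HEALTH_CHECK_PATH` variable name and the `/health` default are our own illustrative choices, not Synchro settings.

```javascript
const http = require('http');

// Hypothetical setting: the health check path is configurable, with a default.
const HEALTH_PATH = process.env.HEALTH_CHECK_PATH || '/health';

// True when the connection originates from the local machine.
function isLocalAddress(address) {
  return address === '127.0.0.1' || address === '::1' || address === '::ffff:127.0.0.1';
}

// Answer the health check for local callers only, and hide the endpoint
// (404) from everyone else.
function handleRequest(req, res) {
  if (req.url === HEALTH_PATH) {
    if (isLocalAddress(req.socket.remoteAddress)) {
      res.writeHead(200, { 'Content-Type': 'text/plain' });
      res.end('OK');
    } else {
      res.writeHead(404);
      res.end();
    }
    return;
  }
  // The application's normal request handling would go here.
  res.writeHead(404);
  res.end();
}

// Build the server without starting it; call .listen(port) to serve.
function createServer() {
  return http.createServer(handleRequest);
}
```

If the health check is instead made from another host, the address test would need to change, or access could be restricted at the proxy as described above.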

Signal handling

Your app should implement signal handling in order to be well behaved (and responsive) in an orchestrated/scheduled environment. The Node.js default handlers, in particular, are not well-behaved. You should handle:

- SIGINT - Shutdown

- SIGHUP - Reconfiguration (if your app can reconfigure itself, such as may be required by ContainerPilot if your app uses resources that may change over its execution)
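In Node.js, the handlers might be wired up as in the sketch below. The `shutdown` and `reload` callbacks are hypothetical application functions. The SIGTERM handler is an addition not listed above: we include it because `docker stop` sends SIGTERM by default.

```javascript
// Install shutdown and reconfiguration handlers on a process-like emitter.
function installSignalHandlers(proc, { shutdown, reload }) {
  // SIGINT: shut down cleanly.
  proc.on('SIGINT', () => shutdown('SIGINT'));
  // SIGTERM: not in the list above, but commonly used to request shutdown.
  proc.on('SIGTERM', () => shutdown('SIGTERM'));
  // SIGHUP: re-read configuration without restarting.
  proc.on('SIGHUP', () => reload());
}

// Typical wiring (not executed here; `server` and `loadConfiguration`
// stand in for the application's own objects):
// installSignalHandlers(process, {
//   shutdown: () => server.close(() => process.exit(0)),
//   reload: () => loadConfiguration(),
// });
```

Taking the emitter as a parameter keeps the function easy to exercise with a plain `EventEmitter` in tests, without sending real signals.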

Static file cache support

If your app serves static files, it probably already has a caching support implementation in place. Since our app previously relied on a CDN, we had to add static file caching support (our proxy now handles the cache, but our app still had to write the appropriate cache headers).
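As an illustration of what writing the appropriate cache headers can mean, the sketch below builds a `Cache-Control` header and a content-based `ETag` for a static file. It is not the Synchro implementation, and the one-hour default `max-age` is an arbitrary example value.

```javascript
const { createHash } = require('crypto');

// Build response headers that allow a caching proxy to store a static file.
function cacheHeaders(content, maxAgeSeconds = 3600) {
  const etag = '"' + createHash('sha1').update(content).digest('hex') + '"';
  return {
    'Cache-Control': `public, max-age=${maxAgeSeconds}`,
    ETag: etag,
  };
}

// True when the request's If-None-Match header matches the current ETag,
// in which case the app can answer 304 Not Modified with no body.
function isNotModified(requestHeaders, headers) {
  return requestHeaders['if-none-match'] === headers.ETag;
}

// Example usage with in-memory content.
const headers = cacheHeaders('body { margin: 0; }');
console.log(headers['Cache-Control']); // public, max-age=3600
console.log(isNotModified({ 'if-none-match': headers.ETag }, headers)); // true
```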

Our New Reference Architecture: Synchro AutoPilot

The Synchro AutoPilot solution discussed above is now our recommended reference architecture. As previously noted, the implementation is available on GitHub. You are free to review, use, and modify it as desired. We have also published the images from our solution on DockerHub. Note that our own production API servers use these exact images. If you are deploying Synchro in a container environment, we recommend that you use these same images.

Deploying On Joyent

Once we came up with our new container-based reference architecture, we needed to migrate our own production Synchro API servers to a container-friendly cloud service provider. We chose Joyent.

Why Joyent?

Joyent is a very natural fit for Synchro, both as a home for our public API server, and as a hosting solution for customers deploying Synchro to support their own mobile apps. While Joyent excels in many areas, they are uniquely ahead of the pack in:

- Node.js leadership

- Docker integration

- Native containers (best price/performance)

- Thought leadership (ContainerPilot is a great example)

Joyent Deployment: How To

If you have Docker installed on your local machine and you have a Joyent account, it’s very easy to deploy containers to the Joyent cloud.

First, install the Triton CLI tool, then configure Docker for Triton. Once you’ve done that, you can use the standard Docker CLI commands, including docker-compose, to manage containers on the Joyent public cloud exactly like you were running them locally. To deploy our production Synchro API server solution, we simply did:

docker-compose up

And our service was up and running on the Joyent cloud. You can then use either Docker commands or the Joyent public cloud portal to monitor and manage your containers.

The final step in our case was to enable Triton Container Name Service (at the Joyent account level, via the portal), then configure our Nginx proxy instances with the appropriate tags/labels.

Our deployment on the Joyent cloud looks very similar to our standard reference deployment:

Synchro Labs Production Deployment on Joyent

The Synchro public API server is currently deployed on the Joyent cloud via Triton and Manta, using the reference architecture discussed above. This deployment supports the Synchro Civics application (accessible from the Synchro Civics mobile app available in all app stores). It also serves Synchro Samples, which allows customers to evaluate various aspects of the Synchro platform using the Synchro Explorer mobile app (also available in all app stores).

Comparing Solutions: Azure Managed Service vs Triton Orchestrated Containers

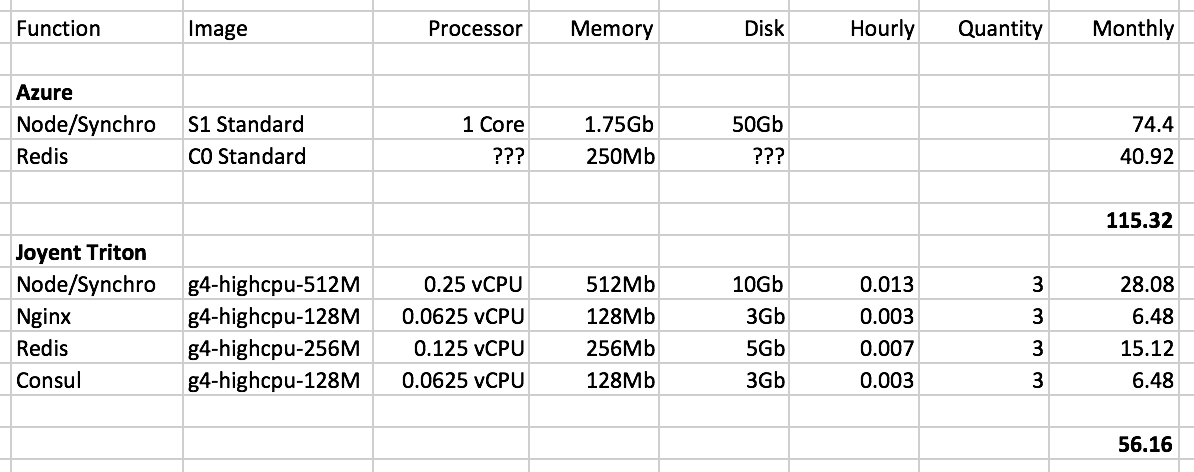

Cost

Note: Our own Synchro API server doesn’t operate at a large scale or rack up a lot of cost (it only needs to support a few thousand simultaneous users). That being said, our cost calculations are provided here.

We were initially concerned with the cost of running all of the containers that our new solution required. Below we show the cost of our previous managed solution versus the cost to run our new orchestrated container solution on Joyent Triton:

You can see that even with running 12 containers in our baseline/minimal containerized configuration, our Triton cost is less than 50% of our previous Azure solution that only had a single managed app container.

That being said, the pricing is not apples-to-apples. With Azure, there are many arbitrary limitations put on your instances that force you to buy capacity that you don't need. For example, we did not need nearly as much memory or processor as the "S1 Standard" image provides on Azure, but the smaller images that would have been more appropriate had arbitrary limitations that made them unusable (poor networking, a maximum number of instances that prevented scaling, etc).

So part of the cost improvement is related to being able to pay for exactly the right size container on Triton (they provide a very wide variety of container profiles with no arbitrary limitations). But part of it is that Triton is just less expensive (the Triton "g4-general-4G" is comparable to the Azure "S1 Standard" except that it has more than twice as much RAM, and it's only 65% of the cost).

Performance

We have not done any detailed end-to-end performance testing. We did do some initial smoke testing, and we profiled some specific areas of concern (our app is very dependent on low latency, and on fast intra-solution transactions between the app and Redis). For everything that we did test, we saw marked improvement in performance. And our app "feels" faster, for whatever that's worth.

We can say with some confidence that the performance didn't get worse, and particularly with the cost improvement, we would have been happy with that.

Service and Support

In using Azure for the past year plus, we have suffered from being a small customer dealing with a giant service provider. We didn't run into too many issues, but when we did, support was non-existent. The model is essentially that to get reasonable support you either have to be a very large customer, or you have to subscribe and pay a non-trivial amount for the right to access support. This was not much different with our use of Amazon AWS services in the past (except that Amazon had much better self-support and peer-support, so it was easier to solve your own problems if you were willing to put in the work).

Our experience with Joyent has been a breath of fresh air. We had a couple of operational issues with our account provisioning and the support tickets were handled almost immediately with a high level of professionalism/competence. We opened a couple of GitHub issues against ContainerPilot and got thoughtful feedback from the maintainer (a Joyent employee). We even asked in advance if we could get a technical backstop in case we ran into porting issues, and an SE was assigned to us (and they knew in advance that we weren't going to be spending a lot of money with them in the near term). Joyent just seemed top-to-bottom to be committed to making sure we were successful.

DevOps Benefits

Environmental: A major, unexpected benefit of moving to an orchestrated container solution is that we can now simulate our production environment in development with a high degree of fidelity, and we can actually directly use our production configuration and state from staging environments before deployment. For example, we can set up a deployment environment that points at the Joyent Docker daemon for production, and set up the exact same environment (same directory, same environment variables, etc) pointing to a local Docker daemon, allowing us to create the exact same Docker environment for local/dev or remote/prod deployment. This is something we couldn't ever really do with any confidence on Azure.

Portability: Because ContainerPilot is open source and will run anywhere containers will run, and because all of the elements of our solution now run in containers, we could port this solution to another provider very quickly and easily (it took two weeks to port from Azure to Joyent, but we're confident we could redeploy our current solution on another provider in a matter of hours at most). We are currently using Docker Compose as our scheduler with Triton, but again, we could change to a different orchestration solution / scheduler, even on a different cloud provider, very easily and with a high degree of confidence.

Summary

We are very happy with our new AutoPilot implementation of Synchro and its components. It has helped us consolidate our application state and move to a production environment that is a much better fit for us (in terms of cost, performance, tooling, environmental fidelity, and other factors).

We are confident that our AutoPilot support will make it easier for customers deploying Synchro to integrate it into their operating environments, regardless of their cloud provider or orchestration solution. And it means that we are now able to offer a reference architecture that is a true turn-key solution, instead of just an "integrate it yourself" Docker container.

Appendix

Synchro Technology stack

Synchro solutions consist of a Synchro Native Client app or apps, and a set of server technologies referred to collectively as Synchro Server. The Synchro Server core technology stack consists of Node.js, the Synchro Server Node.js app and related services, and a set of Node.js packages used by Synchro.

Synchro Native Client

Synchro has native clients on the iOS, Android, Windows Mobile, and Windows platforms. There is a general-purpose version of the Synchro native client called Synchro Explorer, which will run a Synchro app running at any provided endpoint.

When a customer is ready to deploy their own branded native mobile app to end users, they supply their app name, icon, and server endpoint to the Synchro App Builder. The resulting app can then be distributed via app stores or any other desired mechanism. Note that this app contains no customer application code (only a reference to the server endpoint where that code is running).

Node.js

Synchro Server and its components run under Node.js. In addition, apps written using Synchro also run under Node.js. This allows Synchro app developers to build mobile apps with full access to all of the Node.js packages they know and love.

Synchro Server App

A top-level Node.js server application that distributes requests to one of the following support services:

- Synchro API - Core Synchro app server for Synchro apps

- Synchro Web - Web client for Synchro apps

- Synchro Studio - Web-based IDE for Synchro apps

Note: Synchro Web and Synchro Studio are both optional.

Additional Services

Synchro also relies on Session State and Storage support, typically from external services (whether provided by the environment, or deployed as separate servers/services as part of the Synchro deployment).

Key Supporting Node.js Packages

- Asynchronous Operations: co and async - provide support for asynchronous operations, both of Synchro itself, and of Synchro apps

- Storage: pkgcloud (access to storage on Amazon S3, Azure, Google, HP, OpenStack - including IBM BlueMix, and RackSpace) and manta (access to Joyent Manta storage)

- Session State: redis - access to Redis for application session management

- Logging: log4js - provides logging services from all Synchro components and services, highly configurable